B2B RFP Response Process: How Teams Win More Deals

Responding to B2B RFPs has become one of the heaviest pressure points in enterprise sales. A typical B2B buying decision now involves 13 stakeholders, with 89% of purchases spanning two or more departments. That means a single RFP response must hold up under technical scrutiny, security reviews, procurement checks, legal terms, and commercial evaluation, all at the same time.

As complexity rises, execution becomes the deciding factor. Many capable vendors lose RFPs not because their solution falls short, but because responses arrive late, answers drift across sections, requirements are missed, or coordination breaks down between sales and subject-matter experts. At this stage of the funnel, quality and consistency outweigh clever messaging.

This blog focuses on how B2B teams respond to RFPs in this environment. It breaks down where response efforts typically fail, what high-performing teams do to stay aligned under pressure, and how to build a repeatable RFP response process that improves win rates without exhausting sales, solution engineers, or internal experts.

Key Takeaways

- B2B RFP responses succeed or fail based on execution across intake, qualification, requirement coverage, reviews, and submission accuracy rather than writing quality alone.

- Strong products often lose B2B RFPs due to missed requirements, inconsistent answers, outdated content, and review delays rather than solution gaps.

- Manual RFP workflows do not scale well as deal size, response volume, and cross-functional involvement increase, leading to higher error rates and longer turnaround times.

- AI improves B2B RFP responses when it is applied to context handling, content consistency, and reuse from approved sources instead of simple drafting or speed gains.

- Tracking win rate, time to first draft, time to submission, cost per response, and content reuse rate helps teams identify execution issues and improve response quality over time.

What Is an RFP in B2B?

In B2B transactions, a Request for Proposal (RFP) is a formal document buyers use to evaluate vendors for complex, high-value purchases. It defines the business problem, outlines functional and technical requirements, sets compliance and security expectations, and establishes the criteria for vendor evaluation.

For response teams, an RFP functions as a scoring blueprint. Every question, requirement, and instruction signals what buyers care about most and how decisions will be justified internally. Strong responses treat the RFP as an evaluation framework, not a questionnaire to complete.

What a B2B RFP Typically Includes

While formats vary by industry and buyer maturity, most B2B RFPs include a consistent set of sections that response teams must address precisely.

- Business context and objectives that explain why the buyer is evaluating vendors.

- Functional and technical requirements, often scored line by line.

- Security, compliance, and legal requirements, especially for enterprise deals.

- Implementation, support, and service expectations.

- Pricing structure and commercial terms.

- Evaluation criteria and decision timelines.

- Submission instructions and formatting rules.

Each of these sections carries a different weight in the evaluation. High-performing response teams prioritize accuracy, consistency, and clarity across all sections to reduce risk for the buyer and improve scoring outcomes.

Where RFP Responses Fit in the B2B Sales Cycle

B2B buyers use different request formats at different stages of the buying journey. Each format signals how close the decision is and how rigorously responses will be evaluated.

- RFIs appear early in the cycle. They are used to explore the market and understand vendor capabilities. Responses are high-level and informational, with limited scoring or risk scrutiny.

- RFQs come later, once requirements are largely fixed. Evaluation centers on pricing, terms, and delivery models, with minimal room for strategic differentiation.

- RFPs sit at the final gate. At this stage, buyers are reducing risk and preparing to justify a decision internally. Shortlists are small, budgets are allocated, and responses become the primary input for comparison across stakeholders.

What evaluators typically score in RFP responses:

- Alignment to stated requirements.

- Accuracy and consistency across sections.

- Security, compliance, and implementation confidence.

- Clarity that minimizes follow-up questions.

For response teams, this means RFPs demand the highest level of precision. Any inconsistency or generic answer introduces risk at the exact point buyers are trying to eliminate it.

The End-to-End B2B RFP Response Process

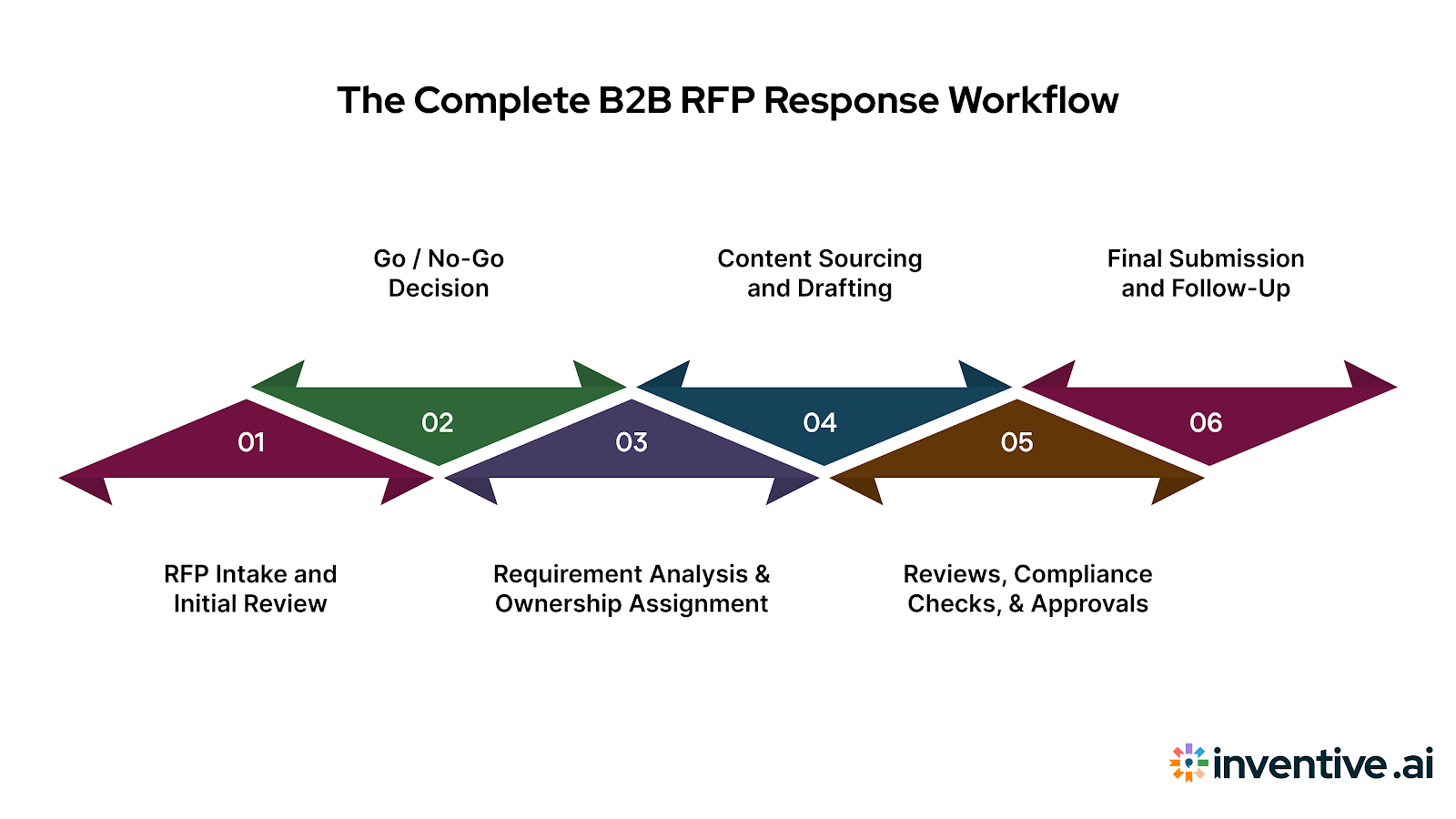

B2B RFP responses follow a predictable sequence of steps, but execution quality varies sharply across teams. The process starts with intake and feasibility checks, moves through decision and execution stages, and ends with submission and follow-up. Each stage has fixed inputs, owners, and outputs.

1. RFP Intake and Initial Review

The intake stage determines whether the RFP can be executed without late-stage disruption. Teams review the document to identify submission rules, mandatory requirements, and constraints that affect scope or feasibility.

This is where disqualification risks and workload size become visible.

Teams typically check:

- Submission deadlines, formats, and portal rules.

- Mandatory technical, security, and legal requirements.

- Required attachments, certifications, and declarations.

- Expected level of customization across sections.

2. Go / No-Go Decision

In B2B RFPs, the go/no-go decision determines how much load falls on sales, solution engineering, security, legal, and leadership.

Committing to the wrong RFP strains solution engineers, security, and legal reviewers, and often weakens parallel deals by pulling attention and expertise away at the wrong time.

A viable go decision is based on execution reality, not optimism. Teams need to confirm buyer intent, solution fit against mandatory requirements, and internal capacity to respond without shortcuts. RFPs that require heavy exceptions, rushed reviews, or last-minute rewrites tend to fail quietly, even when the product is competitive.
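The three checks above (buyer intent, fit against mandatory requirements, and internal capacity) can be sketched as a simple gate. This is a minimal illustration, not a prescribed scoring model: the `RfpAssessment` fields, the hour estimates, and the strict rule that any unmet mandatory requirement blocks the bid are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class RfpAssessment:
    """Hypothetical intake summary; field names are illustrative."""
    buyer_intent_confirmed: bool
    mandatory_requirements: int      # total mandatory requirements in the RFP
    mandatory_requirements_met: int  # met without exceptions
    hours_needed: float              # estimated SME and reviewer hours
    hours_available: float           # hours those people can commit


def go_no_go(a: RfpAssessment) -> tuple[bool, list[str]]:
    """Return (decision, blockers). Any blocker means no-go (assumed rule)."""
    blockers = []
    if not a.buyer_intent_confirmed:
        blockers.append("buyer intent not confirmed")
    if a.mandatory_requirements_met < a.mandatory_requirements:
        gap = a.mandatory_requirements - a.mandatory_requirements_met
        blockers.append(f"{gap} mandatory requirement(s) need exceptions")
    if a.hours_needed > a.hours_available:
        blockers.append("insufficient capacity to respond without shortcuts")
    return (not blockers, blockers)


decision, blockers = go_no_go(RfpAssessment(True, 40, 37, 120, 150))
print(decision, blockers)
```

Returning the list of blockers alongside the decision keeps the reasoning visible, so a no-go can be explained to the account team rather than simply announced.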

3. Requirement Analysis and Ownership Assignment

Once a decision is made to proceed, the RFP is broken down into individual requirements. Each question, table, and appendix is assigned to a single accountable owner with authority to finalize content.

Execution steps include:

- Mapping every requirement to one owner.

- Identifying cross-functional dependencies early.

- Separating mandatory responses from scored sections.

- Flagging areas that require custom language or evidence.
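The one-owner rule is easy to audit once requirements and assignments are listed explicitly. The sketch below assumes a flat list of requirement IDs and (requirement, owner) pairs; the IDs, role names, and the `audit_ownership` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical requirement IDs and owner assignments, for illustration only.
requirements = ["SEC-1", "SEC-2", "TECH-1", "LEGAL-1"]
assignments = [
    ("SEC-1", "security_lead"),
    ("SEC-2", "security_lead"),
    ("TECH-1", "solutions_engineer"),
    ("TECH-1", "product_manager"),  # second owner: violates the one-owner rule
]


def audit_ownership(requirements, assignments):
    """Return (requirements with no owner, requirements with several owners)."""
    owners = defaultdict(set)
    for req_id, owner in assignments:
        owners[req_id].add(owner)
    unassigned = [r for r in requirements if r not in owners]
    contested = {
        r: sorted(owners[r])
        for r in requirements
        if len(owners.get(r, ())) > 1
    }
    return unassigned, contested


unassigned, contested = audit_ownership(requirements, assignments)
print("No owner:", unassigned)
print("Multiple owners:", contested)
```

Running the audit before drafting starts surfaces gaps while there is still time to reassign work.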

4. Content Sourcing and Drafting

Drafting relies on assembling approved content rather than writing from scratch. Teams pull from prior responses, security documents, product materials, and legal language, then adapt them to the buyer’s context.

Key execution points:

- Use validated answers for recurring requirements.

- Adjust terminology and scope to match the buyer’s environment.

- Ensure consistency across technical, security, and commercial sections.

- Avoid introducing claims that require new approvals.

5. Reviews, Compliance Checks, and Approvals

Reviews occur after complete drafts are assembled. At this point, multiple teams are involved, each focused on different risk areas. Without structure, feedback overlaps or conflicts.

Effective execution involves:

- Assigning named reviewers per section.

- Limiting feedback to accuracy, compliance, and alignment.

- Consolidating edits before applying changes.

- Verifying that updates do not introduce contradictions elsewhere.

Uncontrolled review cycles extend timelines and introduce errors rather than removing them.
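Checking that edits do not introduce contradictions can be partly mechanized when key claims are recorded in a structured form. The following is a deliberately simple, rule-based sketch: it assumes each section's claims have already been extracted into key/value pairs, which is the hard part in practice. The section names, claim keys, and values are invented for illustration.

```python
from collections import defaultdict

# Hypothetical structured claims pulled from each section of a draft.
claims = {
    "technical": {"uptime_sla": "99.9%", "data_residency": "EU"},
    "security": {"data_residency": "EU", "encryption_at_rest": "AES-256"},
    "commercial": {"uptime_sla": "99.5%"},
}


def find_conflicts(claims):
    """Return claim keys that carry different values in different sections."""
    seen = defaultdict(dict)  # claim key -> {value: [sections stating it]}
    for section, facts in claims.items():
        for key, value in facts.items():
            seen[key].setdefault(value, []).append(section)
    return {key: values for key, values in seen.items() if len(values) > 1}


conflicts = find_conflicts(claims)
print(conflicts)
```

Here the technical and commercial sections state different uptime commitments, which is exactly the kind of mismatch that should be resolved before approval.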

6. Final Submission and Follow-Up

Submission requires verification against the RFP instructions rather than content edits. Teams confirm that all mandatory sections, attachments, and signatures are included and that formatting matches portal requirements.
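A pre-submission check of this kind can be expressed as a comparison between what the RFP requires and what is in the package. The sketch below assumes the checklist and file-format rules have been transcribed by hand from the RFP instructions; the item names, filenames, and `verify_package` helper are hypothetical.

```python
from pathlib import PurePath


def verify_package(required_items, files, allowed_suffixes):
    """Compare a submission package against the RFP's checklist.

    `files` maps a checklist item name to the filename prepared for it.
    Returns (missing checklist items, filenames in a disallowed format).
    """
    missing = sorted(set(required_items) - set(files))
    bad_format = sorted(
        name
        for name in files.values()
        if PurePath(name).suffix.lower() not in allowed_suffixes
    )
    return missing, bad_format


# Illustrative data: the portal is assumed to accept PDF only.
required_items = ["technical_response", "pricing_sheet", "signed_declaration"]
files = {"technical_response": "response.pdf", "pricing_sheet": "pricing.xlsx"}

missing, bad_format = verify_package(required_items, files, {".pdf"})
print("Missing:", missing)
print("Wrong format:", bad_format)
```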

After submission:

- Clarification requests are tracked and answered consistently.

- SMEs are briefed for follow-up discussions or presentations.

- Finalized content is stored for reuse in future responses.

These steps exist in most RFP workflows, but in B2B environments, they carry more weight. Larger deal sizes, longer sales cycles, and multi-department evaluation leave little room for missed requirements or late corrections. When the response process is not controlled end-to-end, execution gaps become visible to buyers and directly affect scoring and confidence.

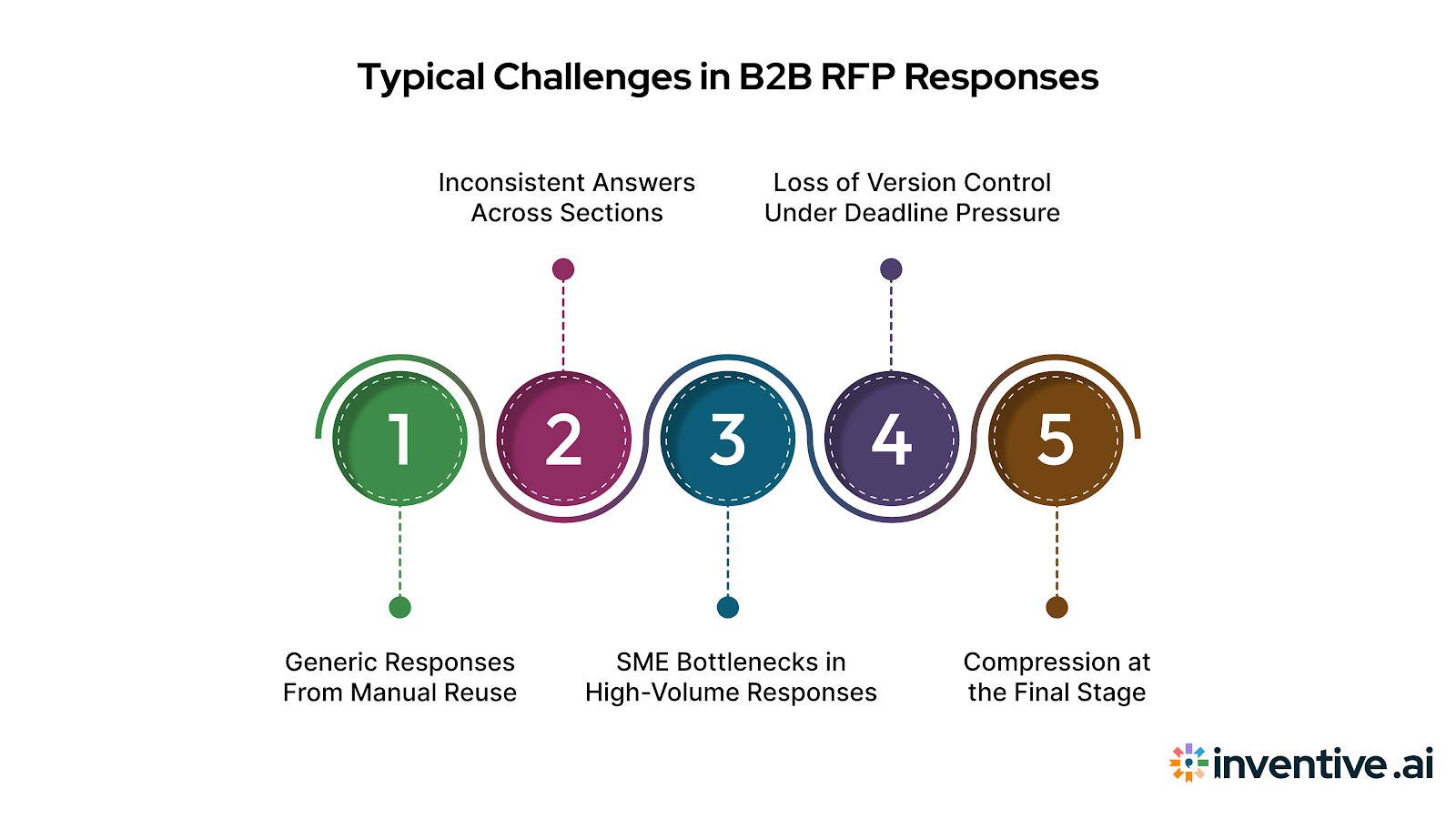

Common Problems in B2B RFP Responses

Most issues in B2B RFP responses are not isolated mistakes. They are structural failures caused by manual workflows operating at enterprise scale. As deal sizes grow and response teams expand, coordination, consistency, and control break down in predictable ways.

Generic Responses From Manual Reuse

Manual reuse relies on shared folders, old proposals, and individual memory. Over time, responses are copied forward without full context, partial updates are made, and assumptions drift.

The result is content that appears relevant on the surface but fails to reflect the buyer’s industry, operating model, or stated priorities. Evaluators read this as low engagement or weak understanding, even when the solution fits.

Inconsistent Answers Across Sections

In B2B RFPs, different teams own different sections. Sales, solution engineering, security, and legal often work from separate source documents. Without a unified response system, terminology, claims, and scope diverge.

Buyers notice when implementation language conflicts with security answers or commercial terms do not align with technical commitments. These inconsistencies directly affect confidence and scoring.

SME Bottlenecks in High-Volume Responses

Manual workflows repeatedly pull the same subject matter experts into near-identical reviews. As RFP volume increases, SMEs become approval chokepoints rather than value contributors. Over time, accuracy erodes, and response timelines stretch.

Loss of Version Control Under Deadline Pressure

Manual collaboration produces parallel drafts, overlapping edits, and unclear ownership. Teams spend time reconciling versions instead of improving responses.

Late-stage merges introduce errors, remove previously approved language, or reintroduce outdated claims. These issues are rarely visible until submission, when correction windows are gone.

Compression at the Final Stage

When earlier steps slip, pressure concentrates at the end of the process. Reviews are rushed, compliance checks are incomplete, and formatting or submission errors appear. These failures are not caused by a lack of effort. They are the result of workflows that cannot absorb B2B-level coordination, review depth, and deadline rigidity.

In B2B evaluations, responses are used as decision records across procurement, legal, security, and executive stakeholders. Manual breakdowns are not just internal inefficiencies. They surface directly in scoring, clarification rounds, and buyer confidence.

This is why many response teams reach a ceiling with manual processes long before RFP volume peaks.

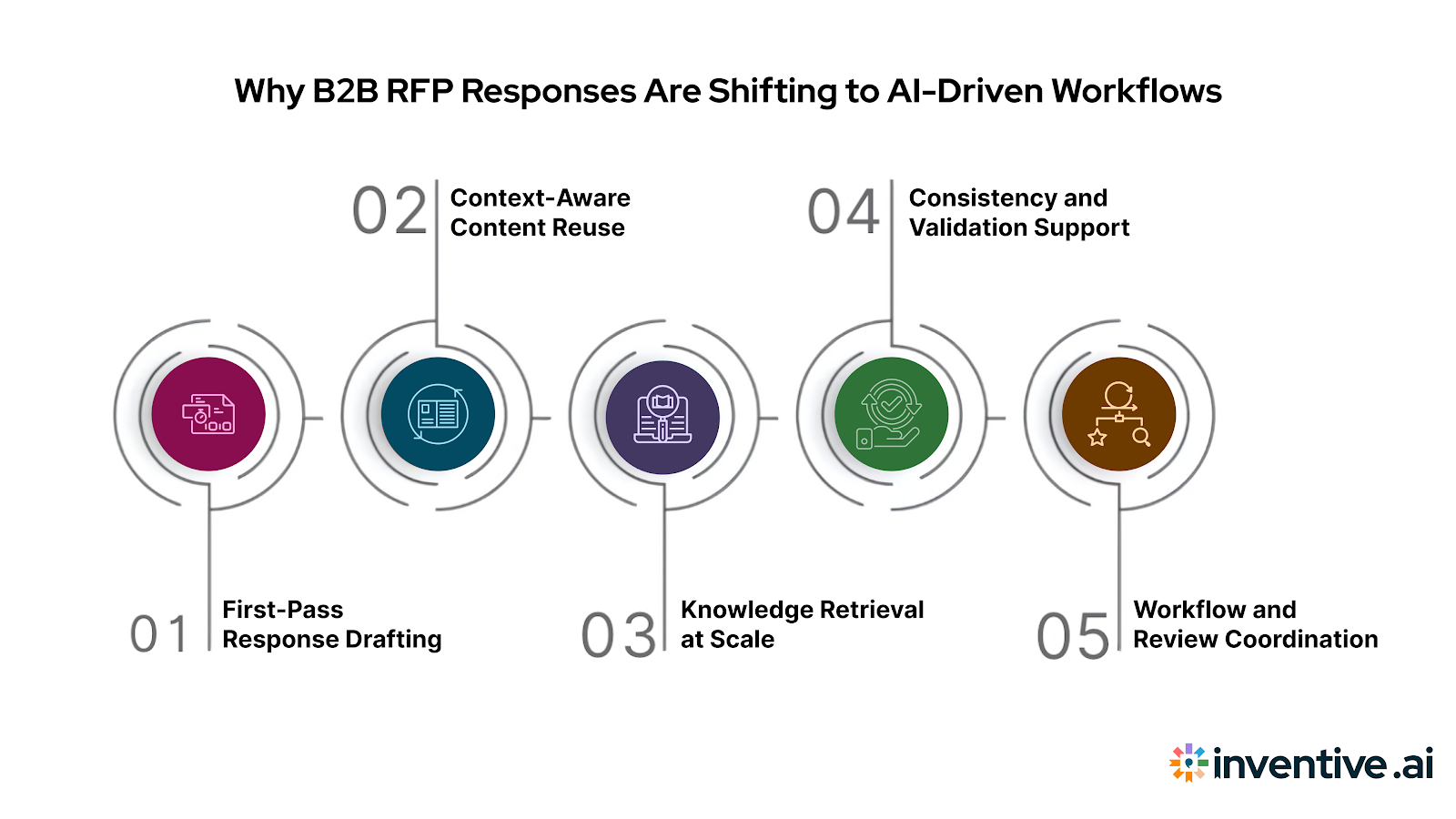

Why B2B RFP Responses Are Moving to AI-First Workflows

Manual workflows struggle to keep up, especially where responses rely on repeated content reuse, SME input, and cross-team coordination.

This is why AI adoption is rising. 83% of sales teams using AI reported revenue growth this year, compared with 66% of teams without AI. B2B RFP teams see the impact directly in turnaround time, response quality, and win rates.

Below are the specific points in the B2B RFP response process where teams use AI today, and what those systems actually take over from manual workflows.

First-Pass Response Drafting

AI can generate initial drafts for repeatable and semi-repeatable questions by pulling from verified internal knowledge. This reduces the time spent assembling baseline answers and shifts human effort toward review, refinement, and differentiation.

Context-Aware Content Reuse

Rather than keyword matching, modern AI understands intent and context. It adapts responses based on industry, buyer type, compliance scope, and deal size. This reduces the risk of irrelevant or misaligned answers that often result from manual copy-paste reuse.

Knowledge Retrieval at Scale

AI replaces manual searching through folders, spreadsheets, and past proposals. It surfaces relevant, approved content from across historical responses, security documents, and product materials, which directly reduces SME dependency for routine questions.

Consistency and Validation Support

AI helps identify contradictions across technical, security, and commercial sections. This is critical in B2B RFPs, where inconsistencies are penalized and often surface only during late-stage reviews in manual workflows.

Workflow and Review Coordination

AI-supported systems assist with ownership tracking, version control, and review readiness. This reduces back-and-forth during approvals and limits the late-stage compression that causes errors.

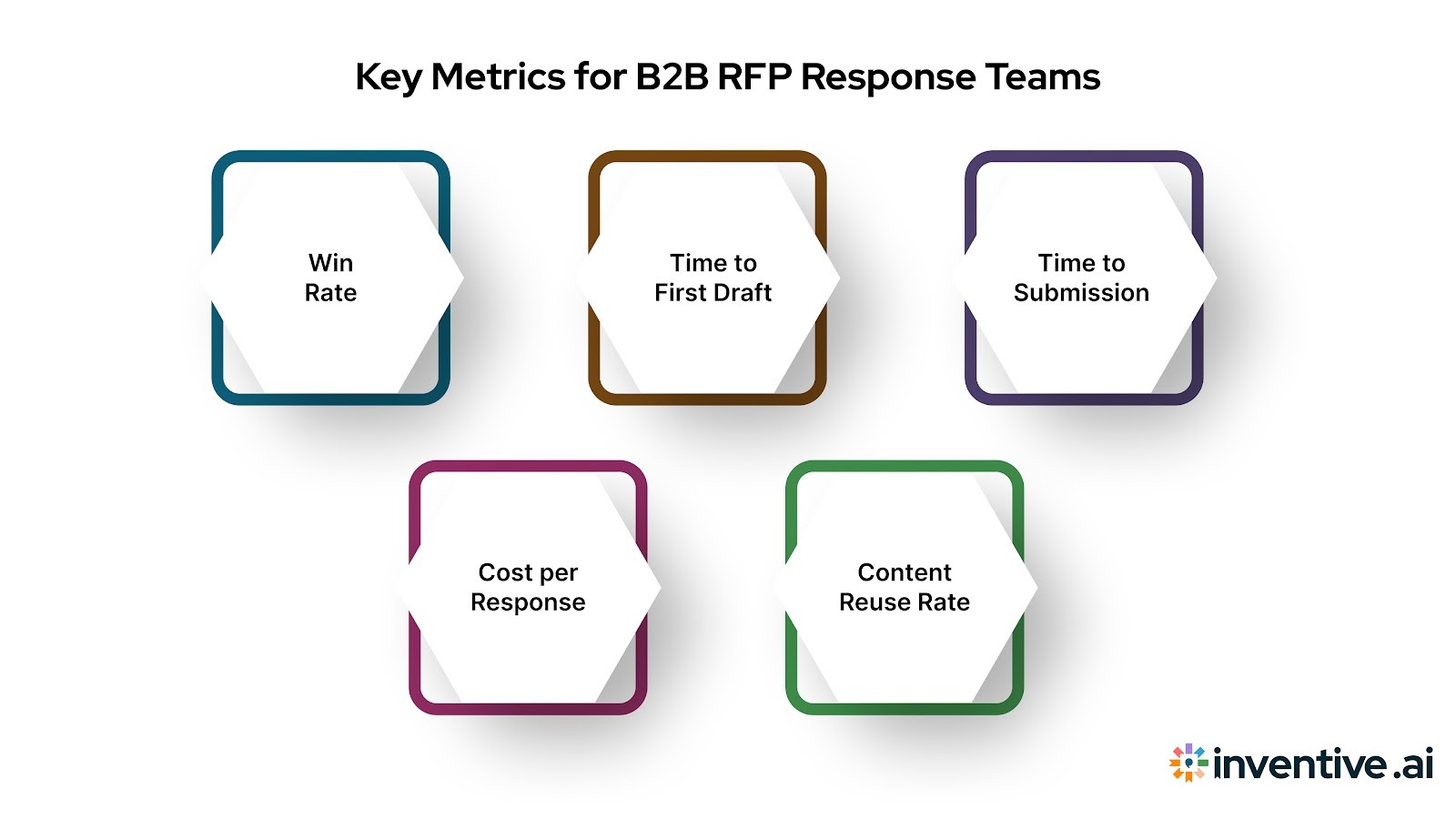

Metrics That Matter for B2B RFP Response Teams

B2B RFP response teams generate large volumes of operational data across sales, solution engineering, security, legal, and proposal management.

Tracking the right metrics helps teams understand where execution breaks down, which stages consume the most effort, and how response quality changes as volume and complexity increase.

The following metrics reflect measurable outcomes across the full B2B RFP response cycle, not surface-level activity.

Win Rate

Win rate shows whether responses meet enterprise buyer expectations across technical, security, legal, and commercial criteria. In B2B RFPs, drops in win rate usually trace back to misqualification, requirement misses, or inconsistencies across sections rather than weak solutions.

Time to First Draft

This measures how quickly a complete, reviewable response is assembled after RFP intake. In B2B teams, long first-draft timelines indicate manual content sourcing, repeated SME involvement, or fragmented knowledge spread across teams.

Time to Submission

Time to submission reflects end-to-end coordination across sales, solution engineering, security, legal, and approvals. Delays here often come from review congestion, version reconciliation, or late discovery of compliance issues.

Cost per Response

Cost per response captures the total internal effort required to complete an RFP, including senior technical and compliance resources. Rising costs signal that experienced teams are spending time on repeatable work instead of deal-specific evaluation and positioning.

Content Reuse Rate

Content reuse rate shows how much of a response is built from approved, validated material versus net-new writing. In B2B RFPs, low reuse rates indicate weak knowledge control and increase the risk of inconsistent or outdated answers.
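The five metrics above can be computed from a small per-RFP record. This is a sketch under stated assumptions: the `RfpRecord` fields, the single blended hourly cost, and measuring reuse by answer count rather than word count are illustrative choices, and the sample data is invented.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class RfpRecord:
    """Hypothetical per-RFP tracking record."""
    received: date
    first_draft: date
    submitted: date
    won: bool
    effort_hours: float   # total internal hours across all contributors
    answers_total: int
    answers_reused: int   # answers built from approved library content


def summarize(records, hourly_cost):
    """Aggregate the five metrics across a non-empty list of RFP records."""
    n = len(records)
    total_answers = sum(r.answers_total for r in records)
    return {
        "win_rate": sum(r.won for r in records) / n,
        "avg_days_to_first_draft": sum(
            (r.first_draft - r.received).days for r in records
        ) / n,
        "avg_days_to_submission": sum(
            (r.submitted - r.received).days for r in records
        ) / n,
        "avg_cost_per_response": sum(r.effort_hours for r in records)
        * hourly_cost / n,
        "content_reuse_rate": sum(r.answers_reused for r in records)
        / total_answers,
    }


records = [
    RfpRecord(date(2025, 1, 6), date(2025, 1, 10), date(2025, 1, 20),
              True, 60, 200, 150),
    RfpRecord(date(2025, 2, 3), date(2025, 2, 9), date(2025, 2, 17),
              False, 40, 100, 60),
]
for name, value in summarize(records, hourly_cost=100).items():
    print(f"{name}: {value}")
```

Tracking these per quarter, rather than per RFP, smooths out the effect of a single unusually large or small response.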

Inventive AI: Improving Response Quality in B2B RFPs

Many tools in the market offer AI capabilities layered onto existing RFP workflows. They assist with drafting, searching, or editing, but leave response quality dependent on manual judgment, fragmented content, and late-stage reviews. In B2B RFPs, this approach breaks down quickly because responses are scored across technical, security, legal, and commercial sections, and weak or inconsistent answers cost deals.

Inventive AI operates as an AI-first RFP response platform, where response quality is treated as the primary control variable. Better answers lead to higher win rates, which is why Inventive consistently produces 2× higher-quality responses compared to other tools benchmarked in real RFP environments.

How Inventive AI Drives Higher-Quality B2B RFP Responses

- Context engine that understands the full RFP: Inventive AI reasons across the entire RFP instead of answering questions in isolation. Technical, security, and commercial answers stay aligned to the same deal context, reducing evaluator confusion and follow-up questions. This directly contributes to higher win rates by improving accuracy and completeness across scored sections.

- Conflict detection across answers: Inventive AI automatically flags contradictory statements between sections before submission. This prevents inconsistencies that often surface during buyer evaluation and reduce confidence, especially in enterprise procurement and security reviews.

- Outdated content detection: Inventive AI identifies stale or non-compliant answers during generation. This reduces manual rewriting and lowers SME involvement, while keeping responses aligned with current product, security, and legal positions.

- Built-in quality benchmarking: Every generated answer is compared against gold-standard content for accuracy, completeness, and clarity. Teams see 95% answer accuracy, with 66% of responses requiring little to no editing, which shortens review cycles and improves time to submission.

- Narrative-style proposal generation: Inventive generates long-form outputs such as executive summaries, security overviews, and proposal narratives, not just Q&A responses. This supports full B2B proposal workflows without additional drafting tools or manual stitching.

- Execution speed without quality loss: By reducing rewriting, review churn, and SME dependency, teams respond 90% faster while maintaining consistency and accuracy, with near-zero hallucinations across submissions.

FAQs About B2B RFP Responses

1. What makes B2B RFP responses different from other sales proposals?

B2B RFP responses are formally evaluated across multiple teams, including technical, security, legal, procurement, and finance. Answers are scored for accuracy, completeness, and consistency, not just persuasion, which makes execution quality critical.

2. Why do strong products still lose B2B RFPs?

Most losses come from execution issues such as missed requirements, inconsistent answers across sections, outdated content, or weak alignment with evaluation criteria, not from a lack of solution capability.

3. How long should a B2B RFP response take to complete?

Timelines vary by complexity, but delays usually come from manual content sourcing, SME bottlenecks, and review churn. Teams that control these steps reduce time to first draft and submission without lowering quality.

4. What metrics should B2B teams track to improve RFP performance?

Key metrics include win rate, time to first draft, time to submission, cost per response, and content reuse rate. Together, these show whether the response process is scalable and predictable.

5. Can AI improve B2B RFP responses without increasing risk?

Yes, when AI is applied to content retrieval, consistency checks, and response assembly using approved sources. The goal is to reduce manual effort and errors while keeping human control over strategy and final approval.

Tired of watching deal cycles stall due to manual questionnaire back-and-forth, Dhiren co-founded Inventive AI to turn the RFP process from a bottleneck into a revenue accelerator. With a track record of scaling enterprise startups to successful acquisition, he combines strategic sales experience with AI innovation to help revenue teams close deals 10x faster.

After witnessing the gap between generic AI models and the high precision required for business proposals, Gaurav co-founded Inventive AI to bring true intelligence to the RFP process. An IIT Roorkee graduate with deep expertise in building Large Language Models (LLMs), he focuses on ensuring product teams spend less time on repetitive technical questionnaires and more time on innovation.