Machine Learning RFPs and Bids Guide

Learn how to write winning Machine Learning RFP responses with clarity, compliance, and speed. Includes templates, tips, and AI tools to streamline your process.

As machine learning becomes central to everything from fraud detection to logistics optimization, the number of ML-specific RFPs is exploding. But with wider adoption comes higher scrutiny. Buyers no longer just scan for capabilities; they want proof that your models hold up under real-world constraints, comply with policy standards, and won’t break in production.

RFPs are where that trust gets built. Every answer is a test of your team’s technical maturity, delivery clarity, and regulatory awareness. Yet most vendors still treat these like generic software bids: they recycle vague claims, overpromise performance, and skip critical criteria.

This guide walks through what procurement teams actually look for in ML RFPs and how to structure responses that earn trust, score higher, and win enterprise deals.

TL;DR

- Statista projects that the global machine learning market will reach $105.45 billion by 2026, resulting in more RFPs, increased scrutiny, and a sharper focus on verifiable delivery.

- Buyers now expect RFP responses to demonstrate deployment readiness, not just model performance or technical claims.

- Your response must reflect real ML maturity, demonstrating explainability, compliance, and infrastructure fit.

- This guide includes proven response structures, buyer-focused phrasing, and editable templates.

What Is a Machine Learning RFP?

A Machine Learning Request for Proposal (ML RFP) is a formal procurement document used by organizations seeking external expertise to develop or deploy machine learning solutions. It outlines the problem to be solved, the technical and compliance requirements, and the criteria by which vendors will be evaluated.

Unlike general software or IT RFPs, ML RFPs are designed for projects where model performance, explainability, and long-term retraining workflows are central to success.

Machine learning RFPs often appear when the buyer lacks in-house capabilities to build robust ML pipelines or when they need vendor-led innovation for predictive or automated decision-making.

What makes an ML RFP distinct is its emphasis on data access protocols, model evaluation metrics, ethical AI practices (like bias detection or fairness audits), and deployment readiness.

Vendors are expected to address not just the model itself, but the full lifecycle, from data ingestion and training to explainability, compliance, and retraining schedules.

Why Responding to Machine Learning RFPs Matters

The surge in AI adoption across industries has turned Machine Learning RFPs into a major channel for acquiring ML capabilities at scale. Whether it's marketing teams seeking better audience segmentation, healthcare providers deploying diagnostic models, or customer experience teams automating service workflows, every sector is actively evaluating ML vendors through competitive RFP processes.

Governments, enterprises, and mid-market buyers are no longer experimenting with AI. They’re procuring it, formally, and often urgently. That means vendors who can respond clearly, credibly, and quickly to ML RFPs have a first-mover advantage in a rapidly maturing market.

Data backs this demand.

- According to McKinsey’s 2025 global AI survey, 78% of organizations now use AI (including ML) in at least one business function, with adoption accelerating across core operational areas.

- Statista projects the global machine learning market to hit $105.45 billion by 2025, fueled by adoption in sectors like finance, retail, healthcare, and government.

Several market shifts are driving this trend:

- Digital-first business models require embedded intelligence to stay competitive

- Customer expectations are pushing personalization, speed, and 24/7 support, often powered by ML

- Enterprise buyers are prioritizing tools that enhance decision-making, automate operations, and reduce risk through predictive insights.

Buyers are not using RFPs just to shop for tools. They are formalizing AI integration strategies and seeking partners who can deliver measurable, secure, and interpretable ML outcomes.

For machine learning vendors, these RFPs represent structured, budgeted opportunities to deliver solutions that meet rising demand for performance, governance, and long-term integration. But what exactly do these buyers ask for? To craft a compelling RFP response, vendors need to understand how ML RFPs are framed, what deliverables are expected, and where they have room to differentiate.

What Buyers Include in a Machine Learning RFP (with Examples)

Machine learning RFPs are not simply IT briefs with “AI” added in. They are structured documents designed to elicit targeted proposals for predictive, adaptive systems, with clear expectations around performance, risk, and integration. To respond effectively, vendors must understand how buyers scope ML requests, express success criteria, and evaluate technical feasibility.

1. Business Objectives: Problem Framing and Outcome Targets

Most ML RFPs begin by defining a specific operational or strategic challenge. This isn't abstract AI exploration; buyers are usually looking to solve a high-impact business problem that existing tools or manual processes can’t address.

Common phrases in this section signal the buyer’s intended transformation:

- “Reduce false positives by 25%” in fraud detection, diagnostic prediction, or compliance alerts

- “Accelerate decision-making in high-volume environments”, such as logistics, underwriting, or customer support.

- “Automated insight extraction at enterprise scale”, often tied to unstructured data like documents, voice, or images.

These objectives help shape not just model selection but also how you frame impact in your executive summary and case studies.

2. Technical Specifications: Model Type, Architecture, and Deployment

Buyers are increasingly precise in describing how models should behave and how results should be delivered.

RFPs often include requirements like:

- “Use of supervised learning with labeled datasets” to ensure traceability and repeatability

- “Required ROC-AUC > 0.85 on holdout set” to benchmark model performance using a measurable standard

- “Deployment via containerized microservices or REST APIs”, reflecting expectations for integration-readiness

You may also see stipulations for model retraining intervals, latency thresholds, or framework preferences (e.g., PyTorch, TensorFlow, ONNX).
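A requirement such as “ROC-AUC > 0.85 on holdout set” is easy to verify before you quote a number in a response. The sketch below is one minimal way to do that using only the Python standard library; the function name `roc_auc` and the sample labels and scores are illustrative assumptions, not data from any real RFP.

```python
def roc_auc(labels, scores):
    """ROC-AUC via the rank-sum (Mann-Whitney U) formulation.

    labels: 1 for the positive class, 0 for the negative class.
    scores: model scores, higher meaning "more likely positive".
    Tied scores receive their average rank.
    """
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over the run of items that share the same score.
        while j + 1 < n and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank for the tied run
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1

    n_pos = sum(1 for _, y in pairs if y == 1)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("holdout set must contain both classes")

    rank_sum_pos = sum(r for r, (_, y) in zip(ranks, pairs) if y == 1)
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


# Hypothetical holdout set: labels the model never saw during training.
holdout_labels = [0, 0, 1, 0, 1, 1, 0, 1]
holdout_scores = [0.10, 0.35, 0.40, 0.45, 0.60, 0.80, 0.20, 0.90]

auc = roc_auc(holdout_labels, holdout_scores)
meets_requirement = auc > 0.85  # threshold taken from the example RFP phrase
print(f"holdout ROC-AUC = {auc:.4f}, meets > 0.85: {meets_requirement}")
```

In a real response you would run this check (or your ML library's equivalent) on the buyer-specified holdout data and report how the split was made alongside the score.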

3. Data & Compliance: Privacy, Provenance, and Risk

Unlike traditional software RFPs, ML requests often come with heightened scrutiny around data handling and compliance. Buyers want to mitigate risk at every stage—from model training to real-world inference.

Phrases and expectations might include:

- “Vendor must demonstrate data preprocessing standards”, such as normalization, missing value handling, or augmentation

- “Solution must comply with HIPAA, GDPR, and internal auditability requirements”, especially in healthcare, finance, and government sectors.

- “Data access logs and provenance must be traceable”, ensuring accountability for any model-driven decision.

Each requirement, whether technical, strategic, or regulatory, is a signal of what matters most to the organization: accuracy, explainability, and operational impact.

Next, you’ll need to translate those requirements into a response that builds confidence by showing how your solution aligns with the buyer’s goals, mitigates risk, and delivers measurable value.

Let’s walk through how to respond effectively to an ML RFP, from structuring your response to addressing data, model performance, and compliance expectations.

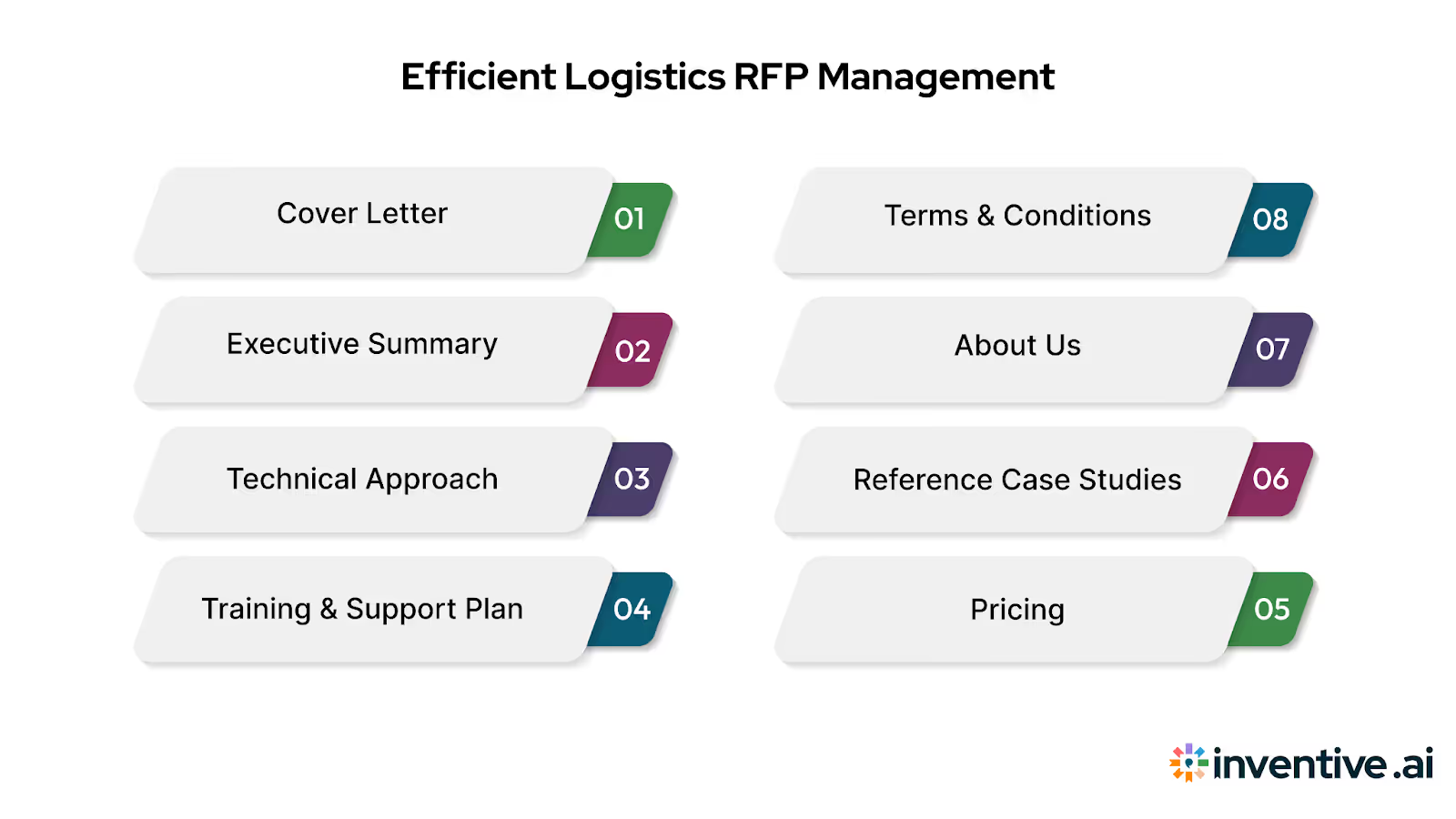

How to Structure and Write a Winning Machine Learning RFP Response (with Templates)

Every section in your response should map precisely to the buyer’s scope. A strong ML RFP response demonstrates your grasp of the business problem, model performance requirements, and lifecycle expectations around deployment, monitoring, and retraining, each of which ties back to the components of an effective RFP response.

The following sections break down what to include and how to frame it in buyer-aligned, decision-ready language.

1. Cover Letter

Use this section to demonstrate domain fit, delivery confidence, and alignment with technical expectations. Reference the ML use case, the buyer’s stated goals, and your experience with similar constraints such as regulatory context, explainability requirements, or retraining cycles.

Avoid generic introductions or buzzwords. This letter should position your team as a capable partner who understands ML delivery, not just ML experimentation.

Cover Letter Checklist:

- Name the ML use case or business objective in the first sentence

- Refer directly to key requirements (e.g., latency, compliance, retraining)

- State your relevance in a single line (e.g., "We’ve deployed ML systems for similar clinical risk prediction in regulated healthcare settings")

- Keep it under 200 words

- Avoid words like “excited,” “honored,” or “innovative” unless the buyer uses them.

- Include the RFP reference number, if applicable

- End with clear contact info and availability

2. Executive Summary

The executive summary gives reviewers a quick read on whether your ML approach aligns with their technical and business priorities. It’s not a marketing section—it’s a strategic alignment statement.

Focus on measurable outcomes, risk controls, and compatibility with their stack or standards.

What to Include in a Strong Executive Summary:

- One-line summary of the use case and ML outcome you’re targeting

- Explicit alignment with buyer KPIs (e.g., precision ≥ 0.92, latency < 200ms)

- Deployment and retraining model

- Risk and compliance alignment, if specified in RFP

- Skip generic AI claims; stick to operational value
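KPI targets like “precision ≥ 0.92, latency < 200ms” are only credible if you can show how they were measured. The following is a minimal sketch of such a check, assuming the latency target applies at the 95th percentile and using the nearest-rank percentile definition; the predictions and latency samples are made-up illustration data.

```python
import math


def precision(y_true, y_pred):
    """Share of predicted positives that are actually positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fp) if (tp + fp) else 0.0


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical evaluation results (assumptions for illustration only).
y_true = [1] * 12 + [0] + [1] + [0] * 6
y_pred = [1] * 13 + [0] * 7
latencies_ms = [120, 135, 110, 150, 142, 128, 160, 175, 131, 119,
                145, 138, 152, 127, 133, 149, 158, 141, 190, 260]

prec = precision(y_true, y_pred)
p95 = percentile_nearest_rank(latencies_ms, 95)

kpi_report = {
    "precision >= 0.92": prec >= 0.92,
    "p95 latency < 200 ms": p95 < 200,
}
print(f"precision = {prec:.3f}, p95 latency = {p95} ms")
print(kpi_report)
```

Stating the percentile and the measurement method next to the number avoids ambiguity: a mean latency under 200 ms and a P95 under 200 ms are very different commitments.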

3. Technical Approach

This is the core of your response. Buyers look for clarity, replicability, and alignment to their stated metrics and deployment needs.

Explain the model type, data flow, feature engineering, evaluation metrics, retraining frequency, and stack compatibility. Use structure, not narrative—diagrams, flowcharts, or bulleted pipelines are ideal.

What Buyers Expect in the Technical Section:

- Input data types and pipelines

- Model selection rationale and configuration

- Evaluation metrics and validation method

- Deployment architecture (cloud/on-prem/hybrid)

- Retraining workflows and drift handling
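When describing drift handling, it helps to name a concrete drift signal and a trigger. One commonly used option is the Population Stability Index (PSI), sketched below. This is an illustrative choice rather than a method the buyer or this guide prescribes: the bin edges, the sample data, and the 0.2 alert level (a widely quoted rule of thumb) are all assumptions you would replace with your own.

```python
import math


def psi(expected, actual, edges, floor=1e-6):
    """Population Stability Index between a baseline sample and a live sample.

    edges: ascending bin boundaries; values fall into len(edges) + 1 bins.
    floor: minimum bin share, used to avoid log(0) for empty bins.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(1 for e in edges if v >= e)  # index of the bin containing v
            counts[idx] += 1
        return [max(c / len(values), floor) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline vs. shifted production traffic.
training = [i / 100 for i in range(100)]
live = [0.3 + 0.7 * i / 100 for i in range(100)]
edges = [0.25, 0.5, 0.75]

PSI_ALERT = 0.2  # assumed alert level; tune per feature and per buyer policy

score = psi(training, live, edges)
retraining_alert = score > PSI_ALERT
print(f"PSI = {score:.3f}, retraining alert: {retraining_alert}")
```

In a proposal, pairing a metric like this with the action it triggers (alert, human review, scheduled retraining) gives the buyer something auditable rather than a general promise to "monitor drift".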

4. Training and Support Plan

This section assures the buyer that your model won’t become a black box or an unsupported asset post-deployment.

Detail your onboarding plan, support structure, and retraining cadence. Use phrases like model governance, stakeholder training, and performance monitoring.

What to Include:

- Onboarding steps

- User and stakeholder training

- Retraining frequency and methodology

- Support SLAs

5. Pricing

Buyers expect pricing to reflect both technical transparency and lifecycle cost clarity.

Break down your costs by phase (dev, deployment, support), and specify fixed vs variable components (e.g., per-API-call inference). Use their language: tiered SLAs, model lifecycle pricing, monitoring fees.
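The fixed-versus-variable split can be shown with simple arithmetic. The sketch below assumes three phases and a per-API-call inference fee; every figure and name in it is hypothetical and exists only to show the shape of the breakdown.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    fixed_fee: float           # charged regardless of usage
    per_call_fee: float = 0.0  # variable component, per inference API call


def cost_breakdown(phases, expected_calls):
    """Return (name, fixed, variable, total) rows for the given call volume."""
    rows = []
    for p in phases:
        variable = p.per_call_fee * expected_calls
        rows.append((p.name, p.fixed_fee, variable, p.fixed_fee + variable))
    return rows


# Hypothetical first-year pricing (illustrative numbers only).
phases = [
    Phase("Development", fixed_fee=120_000),
    Phase("Deployment", fixed_fee=30_000),
    Phase("Support and monitoring", fixed_fee=24_000, per_call_fee=0.002),
]

rows = cost_breakdown(phases, expected_calls=5_000_000)
grand_total = sum(total for _, _, _, total in rows)

for name, fixed, variable, total in rows:
    print(f"{name:<24} fixed={fixed:>10,.0f} variable={variable:>9,.0f} total={total:>10,.0f}")
print(f"{'Year-one total':<24} {grand_total:,.0f}")
```

Presenting the table at two or three call volumes lets the buyer see how the variable component scales before they ask.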

6. Reference Case Studies

Case studies should reflect the same risk level, industry, or constraint as the RFP. Buyers are looking for relevance, not just results.

Use this format:

- Problem: Business challenge and ML opportunity

- Solution: Architecture, data type, model, governance

- Result: Metrics + business impact

- Timeline: Time to deploy, retrain, or show value

7. About Us

This section should reinforce trust through experience, credentials, and previous deployments, not company vision.

Include:

- Team qualifications (e.g., published ML researchers, ex-regulatory consultants)

- Domain-specific project examples

- Security certifications, compliance, or clearances

8. Terms & Conditions

Buyers want to know your default contract terms won’t create extra review cycles. Mirror the structure of the buyer’s own terms where you can, and preempt common objections.

What to Include:

- Retraining and performance warranty terms

- IP and data ownership

- SLA levels for uptime and support

- Risk and liability clauses

Example:

We warrant model performance thresholds for 90 days post-deployment and offer retraining coverage as part of Tier 2 SLA. All code is deployed into the client’s environment, ensuring full IP and data residency compliance.


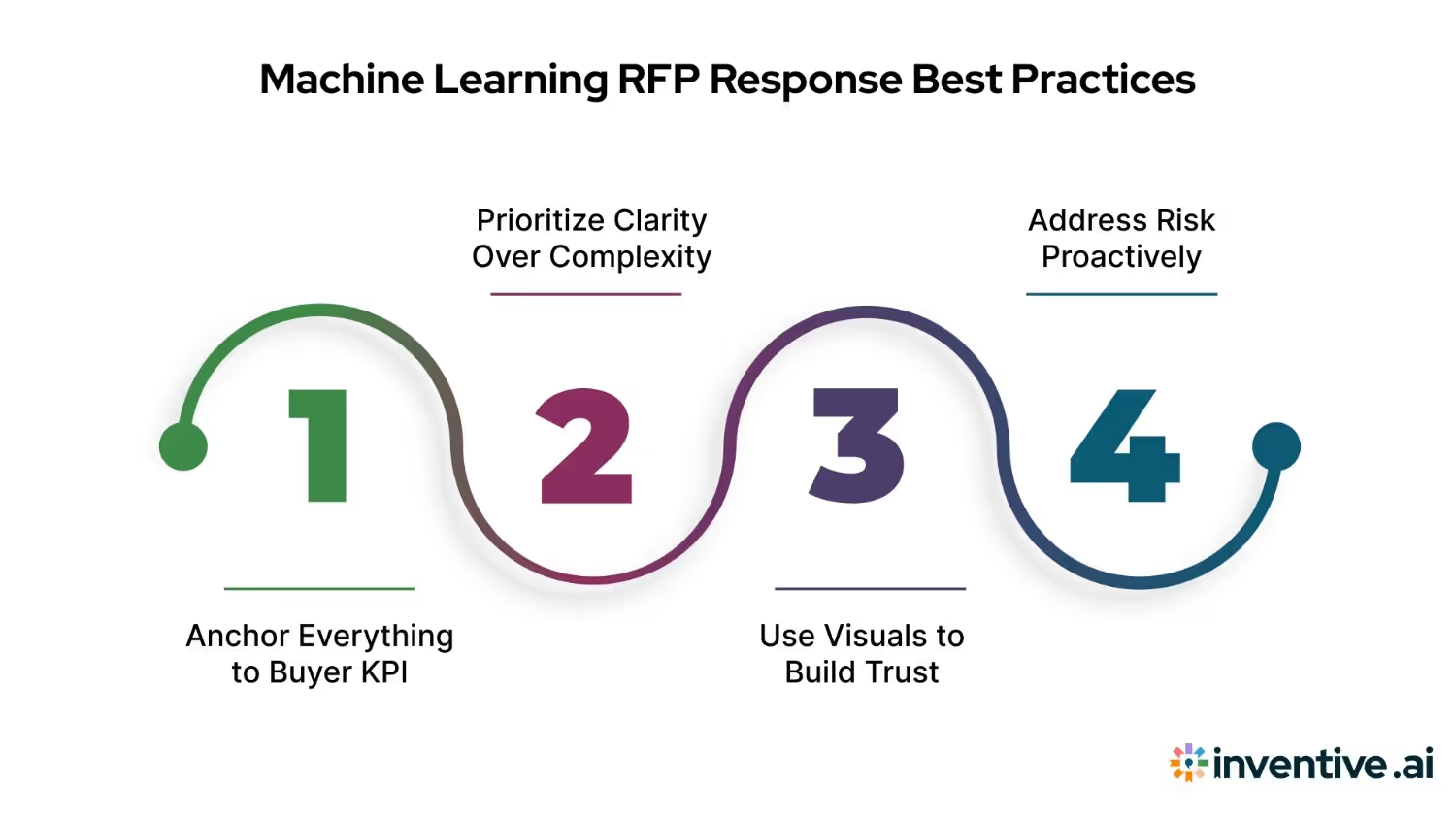

Best Practices for Responding to Machine Learning RFPs

A high-scoring ML RFP response isn’t about showcasing every model in your toolbox. It’s about translating your solution into measurable buyer outcomes—and showing that you understand both the technical and operational context in which it will be used.

1. Anchor Everything to Buyer KPIs

Buyers rarely care about model elegance in isolation. What they want is a system that hits the right metrics under real-world conditions. Whether they’ve asked to “reduce false positives by 25%” or “achieve sub-500ms latency under load,” your response should open with how you’ll get them there.

Use buyer-style language:

- “Our pipeline is optimized for sub-second inference at scale, directly aligning with your SLA requirements for transaction processing.”

- “We target a 12% improvement in recall over your baseline model, based on holdout-set testing with stratified sampling.”

2. Prioritize Clarity Over Complexity

Don’t drown evaluators in jargon. Even technical buyers prefer responses that clarify rather than obscure. Use ML terms, but only in the service of buyer priorities.

Do this:

- “We use an ensemble of tree-based models and neural encoders. Each component contributes to predictive power, while the architecture remains explainable under SHAP analysis.”

Avoid this:

- “Our stack combines XGBoost, BiLSTM, and U-Net in a multi-modal schema with latent attention gates.” (Unless explicitly requested—and even then, explain the why.)

3. Use Visuals to Build Trust

Whenever possible, include:

- Diagrams of your model pipeline

- Performance charts (ROC curves, confusion matrices)

- Error heatmaps or bias detection results

- Deployment timelines in Gantt or swimlane format

These aren't decorative. They build evaluator confidence that your solution is real, tested, and auditable.

4. Address Risk Proactively

Buyers know no model is perfect. What they care about is how you handle edge cases and performance degradation.

Example language:

- “The model triggers human-in-the-loop review for outliers exceeding 3 standard deviations on input features.”

- “Adversarial testing and drift detection are embedded in the CI/CD pipeline, triggering retraining alerts automatically.”
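The first example statement above (human review for inputs more than 3 standard deviations out) can be backed with a small routing rule. This is a minimal sketch under stated assumptions: per-feature z-scores computed against training statistics, with hypothetical helper names and made-up feature rows. A production system would likely use a more robust outlier test.

```python
from statistics import mean, stdev


def fit_feature_stats(training_rows):
    """Compute (mean, sample standard deviation) for each feature column."""
    columns = list(zip(*training_rows))
    return [(mean(col), stdev(col)) for col in columns]


def needs_human_review(row, stats, z_limit=3.0):
    """True if any feature lies more than z_limit standard deviations from its training mean."""
    for value, (mu, sigma) in zip(row, stats):
        if sigma > 0 and abs(value - mu) / sigma > z_limit:
            return True
    return False


# Hypothetical training data: (transaction_amount, items_per_order).
training_rows = [
    (100.0, 1.0),
    (120.0, 2.0),
    (90.0, 1.5),
    (110.0, 2.5),
    (105.0, 1.8),
]
stats = fit_feature_stats(training_rows)

typical_input = (108.0, 1.9)
extreme_input = (400.0, 1.9)

print(needs_human_review(typical_input, stats))  # routed to the model as usual
print(needs_human_review(extreme_input, stats))  # escalated for human review
```

Describing the rule at this level of detail shows evaluators exactly when the model defers to a person, which is often what the risk section of the scoring rubric is looking for.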

Top Mistakes in Machine Learning RFP Responses (and How to Avoid Them)

Even technically strong vendors lose points due to avoidable gaps in framing, clarity, or buyer alignment. Below are common missteps and how to mitigate them while responding to Machine Learning RFPs.

1. Overstating accuracy without context: Always specify evaluation metrics on unseen data, using stratified results that reflect class imbalance and real-world thresholds.

2. Using a boilerplate tech stack: Tailor your architecture to the buyer’s infrastructure, down to the cloud provider, deployment environment, and ML platform.

3. Skipping explainability and interpretability: Buyers expect transparency. Include your interpretability approach and demonstrate how outputs will be made trustworthy to non-technical users.

4. Providing vague or rigid pricing: Avoid flat-rate or one-size-fits-all pricing. Offer a scalable structure with clear cost triggers for usage, retraining, and SLA deviations.

5. Failing to address retraining and model lifecycle: Explain how your solution handles drift, updates, and version control, including how failures are detected and rollback is managed.

6. Relying too heavily on technical language: Convert ML terms into buyer outcomes. Focus on the impact metrics they care about, such as efficiency, cost reduction, and decision latency.

7. Ignoring post-deployment support and monitoring: Buyers are wary of “hand-off” vendors. Define how you’ll support the model beyond delivery, especially in regulated or high-risk contexts.
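The first mistake in the list, overstating accuracy without context, is easiest to see with numbers. The sketch below uses a made-up, heavily imbalanced holdout set (5 positives in 100 cases) to show how a headline accuracy figure can hide poor recall on the class the buyer actually cares about.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


# Hypothetical imbalanced holdout set: 5 fraud cases among 100 transactions.
y_true = [1] * 5 + [0] * 95
# A model that flags only one of the five fraud cases and nothing else.
y_pred = [1] + [0] * 4 + [0] * 95

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
recall = tp / (tp + fn)
false_positive_rate = fp / (fp + tn)

print(f"accuracy = {accuracy:.2f}")  # looks strong in isolation
print(f"recall = {recall:.2f}")      # most positives are missed
print(f"false positive rate = {false_positive_rate:.2f}")
```

Reporting recall, precision, and false positive rate per class, together with the class balance of the evaluation set, gives evaluators the context that a single accuracy figure leaves out.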

How AI Automates Machine Learning RFPs and the Challenges Involved

Responding to Machine Learning RFPs comes with tight deadlines, repetitive formatting, and high-stakes accuracy requirements. AI-powered automation tools are transforming how technical teams manage these responses, enabling them to scale without compromising quality. By streamlining content reuse, tailoring technical details, and ensuring consistency in compliance, vendors can focus on solution depth rather than manual logistics.

Here’s how AI addresses the common bottlenecks in ML RFP responses:

- Response Lag from Manual Drafting: Automation platforms rapidly generate first drafts using pre-approved, domain-specific content. From cover letters to deployment specifications, relevant information is pulled automatically based on RFP context and keywords, reducing turnaround time.

- Inconsistent Technical Narratives: Auto-filled templates ensure uniform articulation of ML architecture, evaluation metrics, and deployment standards across responses. Teams can standardize the presentation of model explainability, retraining schedules, and fairness auditing for every submission.

- High Risk of Compliance Oversights: Built-in prompts for HIPAA, GDPR, or SOC 2 language minimize the chance of missing critical regulatory requirements. Responses can be tagged by vertical (healthcare, finance, or others) and automatically adjusted to align with compliance expectations.

- Static or Generic KPIs: AI can suggest or populate performance metrics that reflect buyer language, such as “target AUC > 0.90” or “latency under 100ms at P95,” drawing from historical RFPs and internal benchmarks.

- Formatting and Reuse Burden: Instead of repeatedly reformatting documents or re-explaining model pipelines, automation enables reusable, version-controlled components that integrate seamlessly with PDFs, portal uploads, Excel sheets, or any RFP format.


How Inventive AI Powers Machine Learning RFP Accuracy

Inventive AI’s AI RFP Agent is designed to streamline your RFP response process, especially for complex, technical domains like machine learning. It automates first drafts, enforces quality, and aligns every answer with the buyer’s context, tone, and infrastructure.

Here’s how it supports machine learning vendors in high-stakes bids:

- AI-Powered Responses With Citations and Confidence Scores: Inventive AI sources content from your internal systems, never the open web, and backs each response with citations and a confidence score, building buyer trust and speeding up internal approvals by ensuring every claim is verifiable.

- Highly Contextual Responses With AI Context Engine: The Context Engine pulls insights from the RFP, past meetings, buyer intel, and your own notes, so every reply reflects the opportunity’s nuances, saving rewriting time and ensuring stakeholder-specific messaging that drives faster buy-in.

- Full Tone & Style Control: Shape the voice to fit the deal. Whether the buyer wants a technical specification or an executive summary, proposal writers can instantly adjust the tone, structure, and detail level without starting over.

- Unified Knowledge Hub With Real-Time Sync: Inventive AI pulls approved content from tools like SharePoint, Confluence, Notion, and Google Drive, turning scattered documentation into a single, searchable source, reducing duplication of effort and ensuring teams always work from the most accurate, up-to-date content.

- AI-Powered Conflict & Content Management: Inventive AI flags conflicting or stale responses, monitors content health, and suggests updates automatically, protecting brand credibility by preventing errors before submission.

- AI Agents for Competitive and Strategic Support: AI agents help your team brainstorm win themes, identify white space, and analyze how competitors pitch similar ML capabilities, enhancing your competitive edge and increasing the likelihood of standing out in crowded bids.

- Built-In Collaboration for Cross-Functional Teams: Coordinate legal, engineering, sales, and proposal reviewers in one place. Inventive AI offers real-time editing, Slack integration, and permission-based access, so teams can contribute without stepping on each other’s work, accelerating proposal timelines and enabling seamless coordination across stakeholders.

Together, these capabilities make Inventive AI’s RFP Agent a trusted partner for ML teams, helping you respond faster, differentiate clearly, and win more of the right deals.

Conclusion

Machine learning isn’t a niche discipline anymore; it’s at the core of national infrastructure, healthcare, defence, and enterprise systems. As AI adoption accelerates, so does scrutiny. Buyers want more than performance benchmarks. They want provable compliance, clarity of integration, and confidence that your models won’t break in the real world.

That’s why your RFP responses can’t just be fast; they need to be verifiable, contextual, and strategic. Every answer is a reflection of how seriously you treat security, governance, and results.

Inventive AI’s AI RFP Agent is built to meet that moment. It helps ML teams respond 2× faster while boosting win rates by 50%, with answers grounded in your approved content, styled to your audience, and aligned to the deal.

Secure your next machine learning opportunity: book a demo today.

FAQs: Machine Learning RFPs

1. What makes an ML RFP different from a typical software RFP?

ML RFPs go beyond functionality—they require detailed explanations of data pipelines, model governance, explainability, bias mitigation, and ongoing performance monitoring. Buyers want to see both innovation and control.

2. How do buyers evaluate ML responses?

Most evaluation frameworks weigh technical fit, risk mitigation, compliance, and clarity. Vague or overly academic responses tend to score lower. Clear architecture diagrams, real-world use cases, and explainable model behavior are critical.

3. What kind of compliance should ML vendors showcase in RFPs?

Common requirements include data privacy (GDPR, HIPAA), model auditability, reproducibility, and alignment with ethical AI guidelines. Demonstrating adherence to ISO/IEC standards or the NIST AI Risk Management Framework can add credibility.

4. How detailed should performance metrics be in an ML RFP?

Buyers expect specifics: training vs inference latency, model accuracy on benchmark datasets, false positive/negative rates, and how those metrics were validated. Vague claims without validation can disqualify your bid.

5. Can Inventive AI help with both technical depth and regulatory clarity?

Yes. Inventive AI pulls directly from your internal docs, past submissions, and compliance records to generate technically sound, audit-ready responses. It balances speed with accuracy, helping you submit with confidence, not guesswork.


Knowing that complex B2B software often gets lost in jargon, Hardi focuses on translating the technical power of Inventive AI into clear, human stories. As a Sr. Content Writer, she turns intricate RFP workflows into practical guides, believing that the best content educates first and earns trust by helping real buyers solve real problems.

Tired of watching deal cycles stall due to manual questionnaire back-and-forth, Dhiren co-founded Inventive AI to turn the RFP process from a bottleneck into a revenue accelerator. With a track record of scaling enterprise startups to successful acquisition, he combines strategic sales experience with AI innovation to help revenue teams close deals 10x faster.