Examples of Tender Response Automation Techniques


Most proposal teams are overwhelmed, not because they lack expertise, but because every RFP demands too much, too fast. Buyers now expect tailored responses, proof of capability, and faster delivery. Meanwhile, teams are buried in version control issues, outdated content libraries, and endless coordination with SMEs.

Without automation, this manual grind becomes a bottleneck: slowing down response times, increasing error rates, and forcing teams to choose between quality and speed.

It’s no surprise, then, that 70% of organizations are already piloting automation technologies, according to McKinsey. Tender response is emerging as one of the clearest use cases, with measurable wins in speed, accuracy, and resource efficiency.

This blog explores how automation is transforming tender response workflows. We’ll cover real-world use cases, key features to look for, and an implementation roadmap for introducing AI-driven tools like Inventive AI into your process.

TL;DR

- Manual tender responses drain time, stretch SME bandwidth, and delay qualification decisions.

- AI agents offer automation capabilities far beyond basic generative AI tools.

- This blog includes real-world examples of how teams are automating key steps.

- You’ll also get a practical implementation roadmap to help you get started fast.

What is Tender Response Automation?

Tender response automation refers to the use of technology to streamline how teams manage formal bid submissions, whether for RFPs, RFIs, or public sector tenders. It helps reduce the time spent on repetitive tasks like formatting, boilerplate content insertion, and document tracking, allowing response teams to work more efficiently at every stage.

Traditional automation relied on static tools like templates, macros, and basic workflows. These helped enforce consistency, but couldn't adapt to the nuances of each tender. The introduction of AI expanded what's possible. AI-powered automation can interpret requirements, surface relevant past content, and generate context-aware draft responses, significantly reducing manual effort in content creation.

Now, AI agents represent the next step in this evolution. Rather than focusing solely on drafting, AI agents assist across the entire tender response cycle. They can evaluate opportunity fit, map buyer requirements to internal capabilities, flag compliance gaps, and even highlight competitor language patterns. Their role is not just generative; it’s collaborative, offering intelligent support throughout the full response process.

Why Manual Tender Response Holds You Back

Manual tender response workflows may have worked in slower, lower-volume environments, but today, they no longer match the pace, scale, or complexity of competitive bidding. Below, we break down the core stages of the tender process and where manual handling creates risk or inefficiency.

1. Opportunity Qualification Depends Too Heavily on Judgment

The first step in any response is deciding whether to pursue the opportunity. In manual workflows, this decision often relies on gut feel, fragmented team discussions, or past experience, rather than structured evidence.

Without a consistent qualification framework, teams either stretch to chase poor-fit bids or miss opportunities they could realistically win.

2. Requirement Analysis ("Shredding") Is Slow and Inconsistent

Shredding involves breaking down complex tender documents into manageable elements like scoring criteria, mandatory requirements, eligibility checks, and technical deliverables.

Manually doing this across long documents is not only time-intensive but also error-prone. Key obligations can be overlooked, duplicated, or interpreted inconsistently across team members.
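As a rough illustration of what shredding produces, the sketch below splits a tender into sentences. It then tags each requirement as mandatory or desirable based on modal verbs such as "shall" and "should". The keyword rules, the `Requirement` structure, and the `shred` function are hypothetical. Real tenders need far more robust parsing (tables, numbered clauses, cross-references), and AI tools interpret context rather than matching keywords alone.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword rules: modal verbs that tender documents commonly use
# to signal how strictly a requirement is enforced.
MANDATORY = re.compile(r"\b(shall|must|is required to|are required to)\b", re.IGNORECASE)
DESIRABLE = re.compile(r"\b(should|preferably|is desirable)\b", re.IGNORECASE)


@dataclass
class Requirement:
    ref: str       # sequential reference, e.g. "R1"
    text: str      # the original sentence
    category: str  # "mandatory" or "desirable"


def shred(document: str) -> list[Requirement]:
    """Split a tender into sentences and keep only those that read as requirements."""
    sentences = re.split(r"(?<=[.;])\s+", document.strip())
    requirements: list[Requirement] = []
    for sentence in sentences:
        if MANDATORY.search(sentence):
            category = "mandatory"  # checked first, so "must" wins over "should"
        elif DESIRABLE.search(sentence):
            category = "desirable"
        else:
            continue  # background text, not a requirement
        requirements.append(Requirement(f"R{len(requirements) + 1}", sentence, category))
    return requirements


tender = (
    "The supplier shall hold ISO 27001 certification. "
    "Bidders should describe their onboarding approach. "
    "The authority was founded in 1998."
)
for req in shred(tender):
    print(req.ref, req.category, "-", req.text)
# R1 mandatory - The supplier shall hold ISO 27001 certification.
# R2 desirable - Bidders should describe their onboarding approach.
```

Even this crude version shows why consistency matters. The same rule applies to every sentence, so two reviewers cannot classify the same clause differently.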

3. Content Gathering and Reuse Is Fragmented

Once the team understands what's needed, the next challenge is finding the right content. But in most manual setups, past responses are scattered across drives, inboxes, and outdated folders.

Writers spend hours digging for old bids, rewriting content from scratch, or repurposing answers without knowing whether they were successful in previous submissions.

4. Subject Matter Experts Are Pulled into Low-Value Work

Subject matter experts (SMEs) are often pulled into tender responses at the wrong time and for the wrong reasons. Instead of validating high-level messaging or strategy, they’re asked to produce content from scratch, review formatting issues, or manually adapt boilerplate text. This slows down the process and creates bottlenecks that affect the entire team.

5. Most Manual Responses Are Reactive, Not Strategic

Manual processes naturally push teams into reactive mode. By the time content is assembled and reviews begin, there’s little bandwidth left to tailor language to the buyer, align to scoring models, or differentiate from competitors.

Each submission becomes a rushed production effort, not a strategic sales document.

Manual tendering may get the job done, but it drains time, duplicates effort, and often leaves teams reacting instead of leading. As bid volume grows and expectations rise, response teams need more than just experienced people; they need smarter systems that can support each stage of the process. That’s where AI-driven automation comes in.

In the next section, we’ll break down real-world techniques that demonstrate how AI is reshaping the tender response process from start to finish.

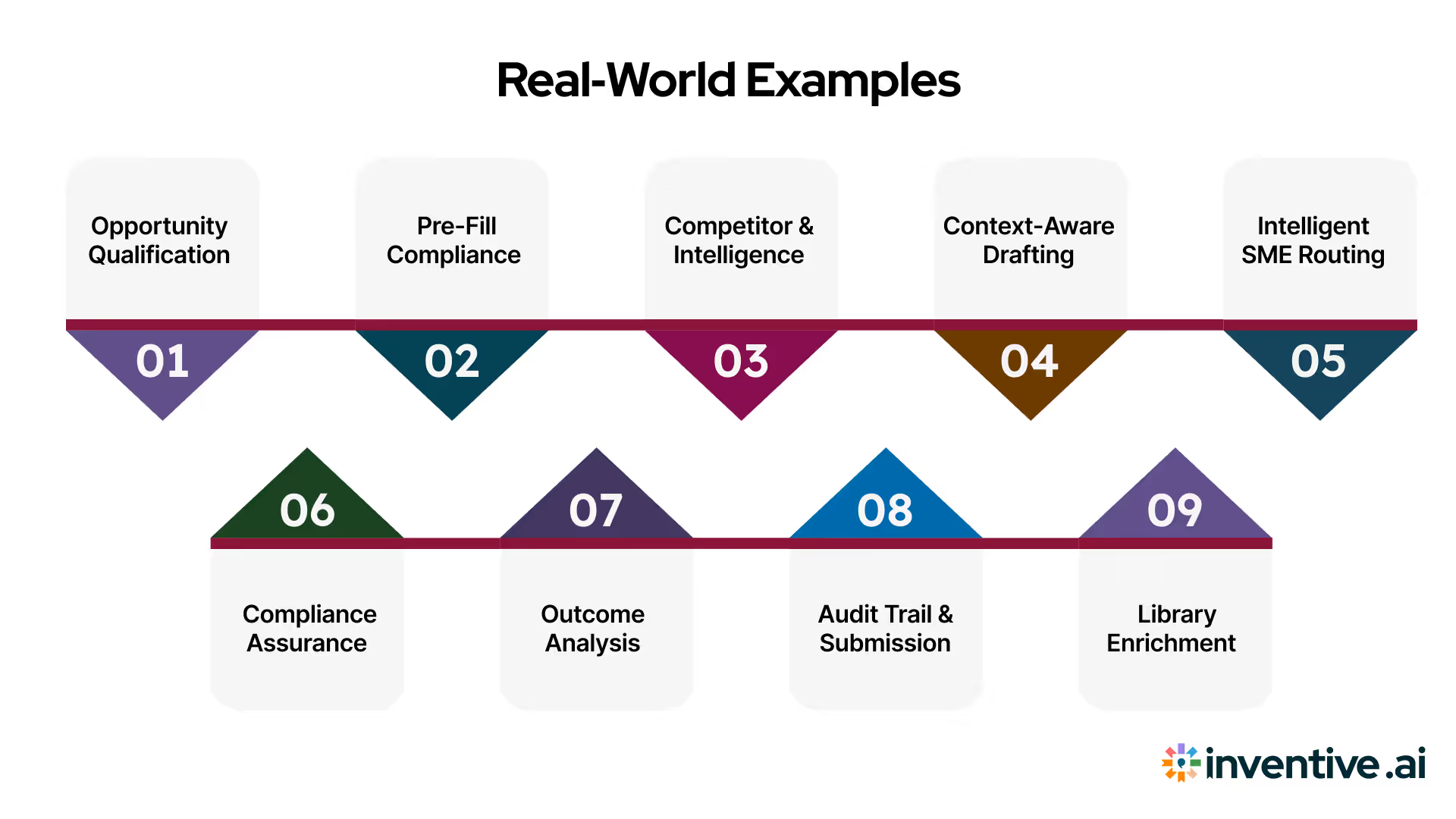

Real‑World Examples of AI‑Powered Tender Automation

AI adoption is progressing through distinct phases, and more organizations are now seeing measurable results. In fact, 66% of teams already using AI agents say the agents deliver clear value by increasing productivity.

In the context of tender responses, this trend carries even more weight. According to McKinsey, agents have the potential to automate complex business processes. By combining autonomy, planning, memory, and integration, they can shift generative AI from a reactive tool to a proactive, goal-driven virtual collaborator.

These capabilities (autonomous reasoning, task orchestration, and system integration) are foundational to winning tenders at scale.

Below, we’ve curated real-world examples of how organizations are implementing AI automation into tender responses. These use cases show how agentic systems can bring speed, structure, and precision to even the most chaotic or manual workflows.

Pre-RFP Phase

The pre-RFP window is often overlooked, but it’s where the best teams win or lose. AI can help you proactively shape deals, choose the right opportunities, and prepare assets before the RFP even drops.

1. Opportunity Qualification at Scale

Many teams still rely on manual Go/No-Go decisions: circulating spreadsheets, chasing SME input, and debating vague fit scores. It’s slow, inconsistent, and often gut-driven.

AI agents replace this with fast, evidence-based qualification.

By integrating with your CRM, past bid data, and eligibility criteria, agents can:

- Auto-score opportunities based on predefined success indicators

- Flag likely low-fit bids based on historical performance, sector misalignment, or capacity constraints

- Pre-fill compliance matrices to expose blockers early

Instead of starting from zero, your team gets a clear risk-return view on each opportunity, within hours, not days. This enables faster prioritization and reduces the noise of unqualified bids.
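Below is a minimal sketch of the auto-scoring and blocker-flagging described above. It assumes three hypothetical inputs: historical win rates per sector, a list of certifications you hold, and a minimum response window. The weights (70% sector fit, 30% time available), the 50-point threshold, and all names are illustrative assumptions rather than a recommended model. In practice, these inputs would come from your CRM and past bid data.

```python
from dataclasses import dataclass

# Hypothetical inputs; in practice these would come from the CRM and bid history.
SECTOR_WIN_RATES = {"healthcare": 0.62, "education": 0.48, "defence": 0.10}
CERTS_HELD = {"ISO 27001", "ISO 9001", "Cyber Essentials"}
MIN_DAYS_TO_RESPOND = 10


@dataclass
class Opportunity:
    name: str
    sector: str
    required_certs: set[str]
    days_to_deadline: int


def qualify(opp: Opportunity) -> dict:
    """Return an illustrative 0-100 fit score, a decision, and any hard blockers."""
    blockers = []
    missing = opp.required_certs - CERTS_HELD
    if missing:
        blockers.append(f"missing certifications: {sorted(missing)}")
    if opp.days_to_deadline < MIN_DAYS_TO_RESPOND:
        blockers.append("insufficient time to respond")

    # Historical win rate in the sector; unknown sectors get a cautious default.
    sector_fit = SECTOR_WIN_RATES.get(opp.sector, 0.2)
    time_fit = min(opp.days_to_deadline / 30, 1.0)
    score = round(100 * (0.7 * sector_fit + 0.3 * time_fit))

    if blockers:
        decision = "no-go"  # hard blockers override the score
    elif score >= 50:
        decision = "go"
    else:
        decision = "review"
    return {"score": score, "decision": decision, "blockers": blockers}


print(qualify(Opportunity("Hospital IT support", "healthcare", {"ISO 27001"}, 21)))
print(qualify(Opportunity("Logistics framework", "defence", {"ISO 27001", "SOC 2 Type II"}, 7)))
```

Hard blockers are kept separate from the weighted score on purpose. A missing mandatory certification should stop a bid outright rather than merely lower its score.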

2. Pre-Fill Compliance and Credential Matrices

Buyers often request compliance matrices before the full draft even begins, and vendors often mishandle them. Manually digging up ISO certificates, registration details, DEI stats, or insurance documents eats hours.

AI agents can retrieve, verify, and format these documents automatically. They:

- Pull the latest documents from your internal systems or DMS

- Match line items to credential types

- Flag missing, expired, or client-incompatible entries

- Populate buyer templates with minimal human input

By the time the RFP team begins drafting, credentialing is already complete, or any gaps have already been escalated.
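The matching-and-flagging step can be sketched as a lookup against a credential register. This sketch assumes a hypothetical in-memory register keyed by exact credential name. A real integration would read from your DMS and would need fuzzier matching between the buyer's wording and your internal document names.

```python
from datetime import date
from typing import Optional

# Hypothetical credential register standing in for records pulled from a DMS.
CREDENTIALS = {
    "ISO 27001": {"file": "iso27001_certificate.pdf", "expires": date(2026, 3, 31)},
    "Public liability insurance": {"file": "pl_insurance.pdf", "expires": date(2024, 12, 31)},
    "Company registration": {"file": "registration.pdf", "expires": None},  # never expires
}


def check_matrix(line_items: list[str], as_of: date) -> list[dict]:
    """Match each buyer line item to a credential and flag missing or expired entries."""
    rows = []
    for item in line_items:
        record: Optional[dict] = CREDENTIALS.get(item)
        if record is None:
            status, file = "MISSING", None
        elif record["expires"] is not None and record["expires"] < as_of:
            status, file = "EXPIRED", record["file"]
        else:
            status, file = "OK", record["file"]
        rows.append({"item": item, "file": file, "status": status})
    return rows


matrix = ["ISO 27001", "Public liability insurance", "Modern slavery statement"]
for row in check_matrix(matrix, as_of=date(2025, 6, 1)):
    print(f"{row['status']:8} {row['item']}")
```

The `as_of` parameter lets you check validity against the submission deadline rather than today's date. A certificate that lapses mid-evaluation is then caught early.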

3. Competitor and Buyer Intelligence Briefings

Rather than waiting for someone to read the tender and Google the buyer, agents can proactively generate strategic briefings. They:

- Summarize buyer preferences from previous awards and public records

- Extract competitor pricing or win patterns from procurement archives

- Suggest differentiators based on known buyer pain points

This ensures your team enters with situational awareness baked in, not cobbled together hours before submission.

During the Tender Response Phase

Once the opportunity goes live, pressure mounts. Timelines shrink, reviewers multiply, and every missed dependency or unclear task starts compounding delays. This is where RFP agents earn their place, not by replacing writers, but by accelerating coordination, surfacing reusable insights, and helping teams stay focused on high-value work.

Let’s look at how agents streamline this high-stakes phase across three core areas:

4. Context-Aware Drafting and Content Matching

The core challenge during drafting isn’t just speed; it’s relevance. Teams waste time sifting through libraries filled with legacy content that’s either outdated, inconsistent, or irrelevant to the current buyer’s priorities.

AI agents solve this by operating like intelligent research assistants that understand both the ask and the context.

Agents can:

- Parse the full question thread and accompanying documents to interpret buyer intent, not just keywords.

- Surface high-performing past responses based on topic, tone, and win rate.

- Automatically rewrite retrieved content to reflect the current bid’s requirements, using tone-shifting and scope-adjustment techniques.

- Auto-populate common boilerplate sections like bios, certifications, and technical specs without manual copy-paste.

Writers move from blank pages to near-complete drafts in minutes, ready for SME review instead of being stuck at square one.
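To show the retrieval idea in miniature, the sketch below ranks library answers by word overlap (Jaccard similarity) with the incoming question. It also adds a small bonus for answers that came from winning bids. This is a deliberately simple stand-in: production tools typically use semantic embeddings rather than word overlap. The library entries, the `win_bonus` value, and the function names are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set; punctuation is discarded."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical library entries; "won" marks answers taken from successful bids.
LIBRARY = [
    {"id": "A1", "text": "We encrypt all customer data at rest and in transit using AES-256.", "won": True},
    {"id": "A2", "text": "Our onboarding programme runs over four weeks with a named manager.", "won": True},
    {"id": "A3", "text": "Customer data is encrypted in transit.", "won": False},
]


def rank(question: str, library: list[dict], win_bonus: float = 0.1, top_k: int = 2) -> list[str]:
    """Return the ids of the top_k entries by overlap score plus a win bonus."""
    q = tokens(question)
    scored = [
        (jaccard(q, tokens(entry["text"])) + (win_bonus if entry["won"] else 0.0), entry["id"])
        for entry in library
    ]
    scored.sort(reverse=True)
    return [entry_id for _, entry_id in scored[:top_k]]


print(rank("How do you encrypt customer data at rest and in transit?", LIBRARY))
# ['A1', 'A3']
```

Note that A3 ("encrypted") only partly overlaps with "encrypt". This is exactly the kind of near-miss that semantic retrieval handles better than word matching.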

5. Intelligent SME Routing and Real-Time Collaboration

Most proposal delays stem from SME bottlenecks caused by unclear ownership, missed deadlines, or disorganized collaboration.

AI agents prevent this by acting as responsive project managers embedded within your RFP stack.

Here’s how they contribute:

- Identify the correct SME based on tagged expertise, past contribution history, and current bandwidth.

- Auto-assign questions with due dates and context, directly in the SME’s preferred workspace (Slack, Teams, or email).

- Monitor task progress and issue smart nudges or auto-reminders, escalating only when deadlines are at risk.

- Maintain a live task board showing ownership, dependencies, and blockers, reducing time wasted on status checks.

This reduces reliance on long email threads and ensures SMEs stay focused on content, not coordination.
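The routing rule can be sketched as "best expertise match first, lightest workload second", with a due date attached. The `SME` fields, the three-day default turnaround, and the convention of returning `None` to trigger escalation are assumptions for illustration. Sending the task to Slack, Teams, or email would require each platform's own API and is left out.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class SME:
    name: str
    expertise: set[str]
    open_tasks: int
    channel: str  # preferred workspace, e.g. "slack", "teams", or "email"


def route(question_tags: set[str], smes: list[SME], today: date, turnaround_days: int = 3) -> Optional[dict]:
    """Pick an SME for a question and return the assignment, or None to escalate."""
    candidates = [s for s in smes if s.expertise & question_tags]
    if not candidates:
        return None  # nobody covers these tags: escalate to the bid manager
    # Prefer the widest tag coverage, then the lightest current workload.
    best = max(candidates, key=lambda s: (len(s.expertise & question_tags), -s.open_tasks))
    best.open_tasks += 1  # record the new load so later questions spread out
    return {"assignee": best.name, "channel": best.channel, "due": today + timedelta(days=turnaround_days)}


team = [
    SME("Priya", {"security", "cloud"}, open_tasks=4, channel="slack"),
    SME("Tom", {"security"}, open_tasks=1, channel="teams"),
    SME("Ana", {"finance"}, open_tasks=0, channel="email"),
]
print(route({"security"}, team, today=date(2025, 1, 6)))
print(route({"security", "cloud"}, team, today=date(2025, 1, 6)))
print(route({"legal"}, team, today=date(2025, 1, 6)))
```

Incrementing `open_tasks` on each assignment is what keeps a single expert from being handed every security question in a bid.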

6. Compliance Assurance and Risk Flagging

Many teams discover compliance gaps too late: after the first draft is built or, worse, after submission. AI agents bring compliance checks into the drafting stage, making issues visible before they become liabilities.

They function as embedded compliance reviewers within your authoring process:

- Cross-reference each requirement against your controlled documents and highlight mismatches instantly.

- Detect outdated or expired files (e.g., policies past revision, lapsed certifications) and flag for urgent review.

- Surface missing evidence or red-flag language that could raise buyer objections (e.g., vague controls, incomplete procedures).

- Suggest stopgap content (like disclaimers or placeholders) with notes for legal/exec review, so no required input is left blank when time runs short.

This shifts compliance from a final checklist to an always-on safeguard embedded in your workflow.
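One narrow slice of this, flagging red-flag language, can be sketched with a phrase list. The patterns and reasons below are hypothetical examples of hedging wording that an evaluator might read as a weak commitment. They are not a standard, and an AI reviewer would weigh context rather than match fixed phrases.

```python
import re

# Hypothetical phrase list: wording that evaluators may read as a weak commitment.
RED_FLAGS = {
    r"\bbest (?:efforts|endeavours)\b": "non-committal obligation",
    r"\bwhere (?:possible|practicable)\b": "conditional commitment",
    r"\b(?:TBC|TBD)\b": "unresolved placeholder",
    r"\bin (?:due course|the future)\b": "no timeline given",
}


def flag_language(answer: str) -> list[tuple[str, str]]:
    """Return (matched phrase, reason) pairs for a draft answer."""
    findings = []
    for pattern, reason in RED_FLAGS.items():
        for match in re.finditer(pattern, answer, flags=re.IGNORECASE):
            findings.append((match.group(0), reason))
    return findings


draft = "We will use best efforts to patch systems where possible; the DR plan is TBC."
for phrase, reason in flag_language(draft):
    print(f"'{phrase}': {reason}")
```

Returning the reason alongside each match gives the reviewer something actionable, such as replacing "where possible" with a concrete patching window.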

Automation in the Post-Tender Phase

Most teams treat RFPs as isolated sprints: hit the deadline, close the file, and move on. But real maturity comes in the post-tender phase, where performance data, feedback loops, and team insights turn isolated wins into repeatable outcomes.

This is where automation shifts from productivity to strategy. Agents capture hidden signals: Which content led to scoring gains? Where did reviewers hesitate? Which SMEs were overloaded or underutilized? Over time, these signals build institutional knowledge that shortens future cycles, reduces risk, and improves your win probability.

Let’s explore three high-leverage use cases where agents create enduring value after submission:

7. Outcome Analysis and Win/Loss Insight

Most teams don’t systematically analyze their losses, and when they do win, they rarely study why. Over time, this limits learning and makes it harder to course-correct.

AI agents treat every submission as data:

- Compare winning and losing responses to pinpoint language, format, or evidence gaps

- Correlate evaluation feedback with submission structure, tone, and completeness

- Cluster past wins by vertical, buyer type, or solution category to refine positioning

- Feed insights back into the response library for future retrieval

This closes the loop, so the next response starts smarter than the last.
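A very small version of "treat every submission as data" is a per-vertical roll-up of outcomes and evaluator scores. The records and field names are hypothetical. This is simple grouping rather than the clustering or language comparison described above; it only shows the kind of aggregate that feeds those deeper analyses.

```python
from collections import defaultdict

# Hypothetical outcome records exported from past submissions.
SUBMISSIONS = [
    {"vertical": "healthcare", "won": True, "evaluator_score": 82},
    {"vertical": "healthcare", "won": True, "evaluator_score": 78},
    {"vertical": "healthcare", "won": False, "evaluator_score": 61},
    {"vertical": "education", "won": False, "evaluator_score": 55},
    {"vertical": "education", "won": True, "evaluator_score": 74},
]


def outcomes_by_vertical(records: list[dict]) -> dict[str, dict[str, float]]:
    """Win rate and average evaluator score for each vertical."""
    groups = defaultdict(lambda: {"bids": 0, "wins": 0, "score_total": 0})
    for record in records:
        group = groups[record["vertical"]]
        group["bids"] += 1
        group["wins"] += int(record["won"])
        group["score_total"] += record["evaluator_score"]
    return {
        vertical: {
            "win_rate": g["wins"] / g["bids"],
            "avg_score": g["score_total"] / g["bids"],
        }
        for vertical, g in groups.items()
    }


for vertical, stats in outcomes_by_vertical(SUBMISSIONS).items():
    print(f"{vertical}: win rate {stats['win_rate']:.0%}, avg score {stats['avg_score']:.1f}")
```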

8. Audit Trail and Submission Governance

Tracking what was sent, when, and by whom is critical, especially for regulated industries or long-term frameworks. But manual archiving leads to gaps and inconsistencies.

AI agents ensure every response is logged, traceable, and review-ready:

- Capture version history and decision rationale across the drafting process

- Index attachments, policies, and certifications used in each submission

- Generate audit-ready summaries aligned to buyer criteria and internal governance needs

- Enable rapid retrieval of past bids based on filters like region, product, or submission date

With agents handling audit trails, teams stay protected and organized, even across high volumes.
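The logging-and-retrieval side of an audit trail can be sketched as an append-only list of submission records. Each record stores a SHA-256 fingerprint of the submitted content, so an archived copy can later be verified against what was actually sent. The `AuditLog` class and its fields are hypothetical. A real system would persist records to tamper-resistant storage rather than keeping them in memory.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


def fingerprint(content: str) -> str:
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class SubmissionRecord:
    bid_id: str
    region: str
    product: str
    submitted_by: str
    attachments: tuple[str, ...]
    content_sha256: str
    submitted_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class AuditLog:
    """Append-only, in-memory log of submissions."""

    def __init__(self) -> None:
        self._records: list[SubmissionRecord] = []

    def log(self, bid_id: str, region: str, product: str, submitted_by: str,
            attachments: list[str], content: str) -> SubmissionRecord:
        record = SubmissionRecord(bid_id, region, product, submitted_by,
                                  tuple(attachments), fingerprint(content))
        self._records.append(record)
        return record

    def verify(self, bid_id: str, content: str) -> bool:
        """True if this content matches what was logged for the bid."""
        digest = fingerprint(content)
        return any(r.bid_id == bid_id and r.content_sha256 == digest for r in self._records)

    def find(self, **filters: str) -> list[SubmissionRecord]:
        """Filter by exact field values, e.g. find(region="UK", product="CRM")."""
        return [r for r in self._records
                if all(getattr(r, key) == value for key, value in filters.items())]


audit = AuditLog()
audit.log("BID-001", "UK", "CRM", "j.smith", ["iso27001.pdf"], "Final response text v3")
audit.log("BID-002", "EU", "ERP", "a.khan", [], "Another response")
print([r.bid_id for r in audit.find(region="UK")])
print(audit.verify("BID-001", "Final response text v3"))
print(audit.verify("BID-001", "Final response text v4"))
```

Records are frozen dataclasses, so a logged entry cannot be edited in place. This mirrors the "what was sent, when, and by whom" requirement.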

9. Library Enrichment from Submitted Bids

The best libraries are built from experience, but content often gets lost post-submission. Many strong responses never make it back into the library, or they do without proper tagging or formatting.

AI agents recover and enrich submitted material automatically:

- Extract high-scoring content from recent wins and tag it by question type

- Update metadata and context based on buyer industry, location, and framework

- Retire outdated or duplicate entries in favor of fresher, more relevant versions

- Structure content into modular, searchable units for easier reuse in future bids

This makes your next bid stronger before you even see the RFP.
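Retiring duplicates can be sketched in two steps: sort entries newest first, then keep an entry only if it is not a near-duplicate of one already kept. This uses `difflib.SequenceMatcher` from the Python standard library as a simple text-similarity measure. The 0.9 threshold and the entry format are assumptions. Because every entry is compared against every kept entry, this approach is only practical for modest library sizes.

```python
from datetime import date
from difflib import SequenceMatcher


def normalise(text: str) -> str:
    """Lowercase and collapse whitespace before comparing."""
    return " ".join(text.lower().split())


def similar(a: str, b: str) -> float:
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


def dedupe(entries: list[dict], threshold: float = 0.9) -> tuple[list[dict], list[dict]]:
    """Keep the newest entry from each group of near-duplicates; retire the rest."""
    kept, retired = [], []
    for entry in sorted(entries, key=lambda e: e["updated"], reverse=True):
        if any(similar(entry["text"], k["text"]) >= threshold for k in kept):
            retired.append(entry)
        else:
            kept.append(entry)
    return kept, retired


library = [
    {"id": "E1", "text": "We hold ISO 27001 certification, renewed annually.", "updated": date(2022, 5, 1)},
    {"id": "E2", "text": "We hold ISO 27001 certification, renewed annually", "updated": date(2024, 2, 1)},
    {"id": "E3", "text": "Our onboarding takes four weeks.", "updated": date(2023, 9, 1)},
]
kept, retired = dedupe(library)
print([e["id"] for e in kept], [e["id"] for e in retired])
# ['E2', 'E3'] ['E1']
```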


Implementation Roadmap of AI in Tender Response Automation

Despite widespread interest, fewer than 10% of AI use cases ever scale beyond the pilot phase. Even when deployed, most remain reactive tools supporting isolated steps rather than transforming full workflows.

To realize real performance gains in the agentic era, organizations must shift from fragmented experiments to structured programs. This means embedding AI across end-to-end tender operations, aligning transformation efforts with business-critical processes, and building trust through measured capability development, not just software rollouts.

Here is a step-by-step process to implement AI automation:

Phase 1: Audit & Workflow Mapping

Establish a clear baseline before introducing automation.

- Conduct a comprehensive audit of the end-to-end tender lifecycle, from opportunity intake to post-submission review.

- Map out team roles, process delays, and failure points to uncover automation-ready pain areas (e.g., intake friction, boilerplate reuse, review cycles).

- Identify where AI can create immediate leverage (e.g., auto-surfacing past responses, triaging SME requests, or pre-filling compliance checklists).

- The output should be a clear AI-fit map that aligns with both technical feasibility and business priority.

Phase 2: AI Library Building

Invest in reusable, high-confidence knowledge assets.

- Centralize all validated past RFP responses, proposals, executive summaries, and boilerplate content into a single AI-consumable knowledge base.

- Tag content by domain, response strength, SME author, and evaluator feedback where available.

- Prioritize segments that:

  - have high reuse frequency,

  - received positive scoring or win feedback, and

  - represent institutional knowledge unlikely to be rewritten from scratch.

- Layer access governance and editing controls to ensure version integrity and accountability.

Phase 3: Controlled Pilots

Build credibility through small, measurable wins.

- Launch pilots on 2–3 lower-risk RFPs to validate AI agent behavior in live scenarios.

- Monitor AI performance on key metrics like:

  - Drafting quality: fluency, accuracy, and relevance of generated responses

  - Retrieval accuracy: whether the correct past content is surfaced in context

  - SME satisfaction: trust in suggestions, time saved, and revision burden

- Capture edge cases (e.g., hallucinations, security red flags) and assess triage protocols.

- Use results to define thresholds for broader rollout or AI intervention triggers.
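Retrieval accuracy is easy to quantify during a pilot if SMEs mark which library answer was the right one for each question. The sketch below computes two common retrieval metrics, hit rate at k and mean reciprocal rank (MRR), over hypothetical pilot data. The question and answer ids are made up for illustration.

```python
def hit_rate_at_k(results: dict[str, list[str]], gold: dict[str, str], k: int = 3) -> float:
    """Share of questions whose SME-approved answer appears in the top k results."""
    if not gold:
        return 0.0
    hits = sum(1 for q, answer in gold.items() if answer in results.get(q, [])[:k])
    return hits / len(gold)


def mean_reciprocal_rank(results: dict[str, list[str]], gold: dict[str, str]) -> float:
    """Average of 1/rank of the approved answer (0 when it was not retrieved)."""
    if not gold:
        return 0.0
    total = 0.0
    for q, answer in gold.items():
        ranked = results.get(q, [])
        if answer in ranked:
            total += 1 / (ranked.index(answer) + 1)
    return total / len(gold)


# Hypothetical pilot data: what the tool retrieved, and what SMEs approved.
retrieved = {"Q1": ["A7", "A2", "A9"], "Q2": ["A4", "A1", "A3"], "Q3": ["A5", "A6", "A8"]}
approved = {"Q1": "A2", "Q2": "A4", "Q3": "A0"}
print(f"hit@1 = {hit_rate_at_k(retrieved, approved, k=1):.2f}")  # 0.33
print(f"hit@3 = {hit_rate_at_k(retrieved, approved, k=3):.2f}")  # 0.67
print(f"MRR   = {mean_reciprocal_rank(retrieved, approved):.2f}")  # 0.50
```

Numbers like these give the "thresholds for broader rollout" something concrete to attach to. For example, a team might require a minimum hit@3 before expanding beyond the pilot.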

Phase 4: Scaling & Refinement

Industrialize what works and evolve based on real-time use.

- Integrate AI response capabilities into primary productivity suites and RFP platforms (e.g., Google Workspace, MS 365, Loopio, RFPIO).

- Roll out prompt templates tailored by team role (bid manager, SME, reviewer).

- Continuously refine scoring logic based on:

  - Reviewer behavior (e.g., edits made)

  - Response outcomes (e.g., wins vs. shortlisted)

  - Internal ratings of draft relevance and tone

- Operationalize content governance with clear ownership, review cycles, and feedback loops to prevent AI drift or duplication errors.
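One way to turn "reviewer behavior (e.g., edits made)" into a number is to measure how much of each AI draft survives review. The sketch below uses word-level `difflib.SequenceMatcher` similarity from the Python standard library as a revision-burden score and averages it per prompt template. The record format and template names are hypothetical. A high-burden template is simply a candidate for refinement, not proof of poor quality.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from statistics import mean


def revision_burden(ai_draft: str, final_text: str) -> float:
    """0.0 means the draft was kept as-is; 1.0 means no words survived review."""
    return 1.0 - SequenceMatcher(None, ai_draft.split(), final_text.split()).ratio()


def burden_by_template(reviews: list[dict]) -> dict[str, float]:
    """Average revision burden for each prompt template used in drafting."""
    scores = defaultdict(list)
    for review in reviews:
        scores[review["template"]].append(revision_burden(review["draft"], review["final"]))
    return {template: mean(values) for template, values in scores.items()}


# Hypothetical review log: AI draft vs. the text that was actually submitted.
reviews = [
    {"template": "exec_summary", "draft": "We deliver measurable savings.", "final": "We deliver measurable savings."},
    {"template": "security", "draft": "Data is protected.", "final": "All data is encrypted with AES-256 at rest."},
]
for template, burden in sorted(burden_by_template(reviews).items(), key=lambda kv: -kv[1]):
    print(f"{template}: {burden:.2f}")
```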

Implementation should be framed as capability development, not tool installation; each phase must build operational maturity and institutional trust.

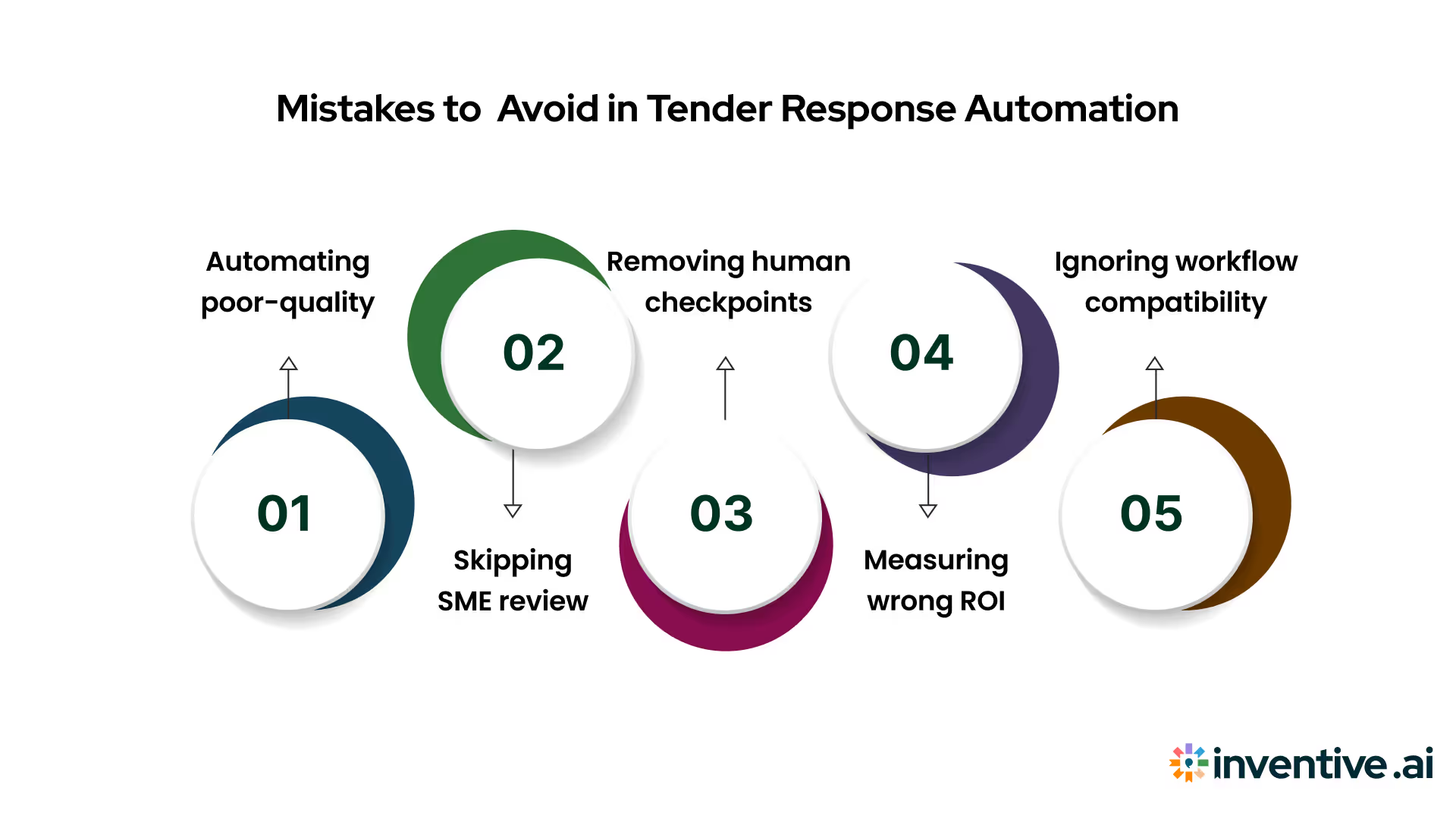

Mistakes to Avoid in Tender Response Automation

Even the most powerful AI tools can backfire if embedded into flawed processes. These aren’t just operational slip-ups; they’re strategic miscalculations that can erode evaluator trust, inflate automation costs, and damage your win rate. Here are five high-impact missteps every bid team must avoid when implementing AI RFP automation:

1. Automating poor-quality content: If your content library is outdated, generic, or inconsistent, AI will amplify those flaws. Automating bad inputs doesn’t improve performance. It scales mediocrity. Always curate and validate response content before applying automation.

2. Skipping SME review on technical answers: AI can draft technically sound-looking responses, but without expert oversight, it risks factual misrepresentation. SMEs must remain involved in reviewing complex, high-stakes sections to preserve trust and accuracy.

3. Removing human checkpoints too early: Eliminating human-in-the-loop safeguards, especially in tenders with nuanced buyer language or bespoke pricing, can leave critical gaps. Automation should support, not replace, human judgment in complex bids.

4. Measuring the wrong ROI: If you only track hours saved, you’ll miss the bigger picture. Bid quality, win rates, and evaluator feedback are stronger indicators of automation’s success than raw time efficiency.

5. Ignoring workflow compatibility: Forcing AI tools into environments where they don’t integrate smoothly (e.g., disconnected from existing CRMs, proposal tools, or content systems) leads to user frustration and low adoption. Match the tech to your team’s actual work rhythms.

How Inventive AI Powers Each Stage of AI RFP Automation

Struggling to turn AI ambition into execution? Here’s how Inventive AI supports your team across each phase of RFP automation, from first setup to fully autonomous responses.

- AI-Powered Drafting Engine: Eliminates blank-page starts by pulling from past proposals, CRM notes, and RFP context to generate tailored responses with citations, in minutes, not days.

- Smart Knowledge Hub: Connects to Google Drive, SharePoint, Notion, and more, organizing your approved content into a single source of truth that’s always up to date and audit-ready.

- Outdated Content Detection: Uses AI to flag low-quality or conflicting entries before reuse, so you don’t risk including stale or non-compliant answers.

- Customer & Competitor Agents: Deliver real-time insights into buyer preferences and rival positioning, helping your team sharpen strategy and speak directly to what matters.

- Collaborative Workflows: Assigns tasks, tracks edits, and syncs with Slack and Microsoft 365, keeping cross-functional teams aligned and accelerating review cycles.

- Control Over Tone and Format: Lets you customize each draft’s voice, structure, and depth, so you hit the right tone whether you’re answering technical specs or crafting an executive summary.


Conclusion

The true advantage of AI in tendering isn’t speed alone. It’s the ability to embed knowledge into your process, so each response gets sharper, faster, and more aligned with buyer expectations.

As competition intensifies and deadlines become tighter, organizations that scale intelligently will set the standard. Inventive AI provides your team with the systems and clarity to compete at that level on every bid.

Bring order to the chaos of tendering. Inventive AI gives you control, consistency, and speed, without rewriting your entire workflow.

FAQs

1. What types of tender responses can be automated with AI?

AI can support a wide range of tender types, including RFPs, RFIs, PQQs, compliance questionnaires, and government bids, by extracting requirements, suggesting tailored answers, and assembling content from your knowledge base.

2. Does AI replace bid writers or support them?

AI doesn’t replace human expertise; it amplifies it. Tools like Inventive AI reduce admin work, surface relevant insights, and allow bid teams to focus on strategy, tone, and differentiation.

3. How much time can AI actually save in the tendering process?

Teams using AI automation tools report up to 90% faster response times, especially on repetitive sections like company information, case studies, and policy documents.

4. Is AI automation secure enough for sensitive bids?

Yes. Enterprise-grade AI platforms like Inventive AI offer robust data governance, access controls, and secure cloud infrastructure to meet the demands of high-stakes, regulated industries.

5. How long does it take to implement an AI tender automation system?

With guided onboarding and existing content libraries, many teams start seeing results in under 30 days, with workflows tailored to fit their existing processes rather than disrupt them.


Understanding that sales leaders struggle to cut through the hype of generic AI, Mukund focuses on connecting enterprises with the specialized RFP automation they actually need at Inventive AI. An IIT Jodhpur graduate with 3+ years in growth marketing, he uses data-driven strategies to help teams discover the solution to their proposal headaches and scale their revenue operations.

Knowing that complex B2B software often gets lost in jargon, Hardi focuses on translating the technical power of Inventive AI into clear, human stories. As a Sr. Content Writer, she turns intricate RFP workflows into practical guides, believing that the best content educates first and earns trust by helping real buyers solve real problems.
