The Ultimate Guide to AI Compliance Questionnaires for Businesses

Ensure responsible AI use with our compliance questionnaire guide, covering model documentation, risk assessments, and human oversight best practices.

Precision defines effective AI governance. It ensures models align with regulatory standards, minimize risk, and earn stakeholder trust. It is the difference between treating compliance as a checkbox and using it as a competitive advantage.

According to the McKinsey Global AI Adoption Survey, 78 percent of global enterprises reported active AI adoption in 2024, a sharp rise from 20 percent in 2020. Yet operational inefficiencies persist. In the RFP process, for example, teams still spend an average of 30 to 50 hours responding to a single RFP, often under tight deadlines and with fragmented content.

As businesses deploy models across hiring, lending, diagnostics, and supply chain operations, regulators no longer tolerate ambiguity. The stakes have shifted. Poor documentation, biased data sets, opaque logic chains, and unchecked automation no longer reflect speed; they signal negligence.

Compliance can’t remain reactive. It must be integrated into development pipelines, product reviews, and operational workflows. A structured compliance questionnaire for AI transforms broad regulatory principles into practical checkpoints. It brings standardization across teams, embeds accountability into decision cycles, and signals to stakeholders that governance isn’t a mere formality; it’s a design choice.

This article explores how to create and deploy these tools effectively, starting with what AI compliance entails and why questionnaires outperform traditional audits.

TL;DR

- 78% of global enterprises now use AI (McKinsey, 2024), but most lack strong governance. Questionnaires help bridge this gap with structured, repeatable checks.

- AI compliance questionnaires standardize risk evaluation, document the entire model lifecycle, and align with major frameworks like the EU AI Act, NIST AI RMF, and ISO/IEC 42001.

- Tailor questions to each system's risk level and involve legal, technical, and compliance teams in the design. Use version control to track changes and automation to streamline distribution and response collection, which improves scalability and traceability.

- Explainability tools such as SHAP and LIME show how AI models reach decisions by estimating how much each input feature contributed to a prediction, helping stakeholders understand and trust model outputs.

What Is An AI Compliance Questionnaire?

An AI compliance questionnaire is a structured set of questions designed to evaluate how an organization develops, deploys, and manages artificial intelligence systems in line with regulatory, ethical, and internal governance standards. This tool helps identify potential compliance gaps, assess risks, and ensure responsible AI practices across the enterprise.

Typically part of a broader AI risk management or audit process, the questionnaire covers areas such as data privacy, model transparency, bias mitigation, accountability, human oversight, and alignment with global frameworks, including the EU AI Act, OECD principles, and NIST AI RMF.

As AI systems increasingly influence hiring, lending, healthcare, and logistics, the risks of bias, misuse, and lack of transparency have become real and urgent. Regulators worldwide are responding with strict expectations for how organizations develop and manage AI. This makes AI compliance a non-negotiable business priority.

Why Questionnaires Work for AI Compliance

According to IBM’s 2025 AI Governance Report, 87% of executives claim their organizations have clear AI governance frameworks; however, fewer than 25% have fully implemented tools to manage risks such as bias, transparency, and security, often because of resource constraints, lack of expertise, and evolving regulatory requirements.

As organizations scale AI adoption, they face increasing regulatory scrutiny. From data privacy to model transparency, AI governance has become a business-critical priority. A structured AI compliance questionnaire offers a practical way to manage that complexity, turning vague regulations into clear, trackable actions.

Here’s why compliance questionnaires work so well in the AI context:

- Standardizes Risk Assessment: A questionnaire applies the same assessment criteria to every AI system. Whether reviewing internal models or third-party tools, it ensures that every deployment meets baseline compliance standards.

- Documents the Full AI Lifecycle: Questionnaires prompt teams to record essential information such as model purpose, training data sources, decision logic, and validation methods. This documentation supports both internal audits and external reporting requirements.

- Promotes Cross-Functional Accountability: AI compliance involves multiple stakeholders, including legal, procurement, product, and data teams. A centralized questionnaire facilitates collaboration and ensures everyone follows the same requirements.

- Reduces Subjectivity in Reviews: Without structured tools, reviews of AI systems often rely on inconsistent judgment. Questionnaires bring consistency by applying the same standards to every model and vendor.

- Aligns with Global Standards: An AI compliance questionnaire can map to frameworks such as the EU AI Act, NIST AI Risk Management Framework, and ISO/IEC 42001. This makes it easier to meet multi-jurisdictional obligations.

- Supports Continuous Oversight: Compliance is not a one-time task. Questionnaires can be reused across the AI lifecycle, from development to deployment and monitoring, to flag emerging risks and ensure ongoing governance.

- Streamlines Vendor Due Diligence: For organizations using third-party AI systems, these questionnaires simplify vendor evaluation. Suppliers can complete standardized compliance forms during onboarding, enabling teams to identify risks early and respond promptly.

- Builds a Culture of Responsible AI: Embedding an AI compliance questionnaire into everyday workflows reinforces ethical AI development. It helps create a shared understanding that accountability is a core part of innovation.

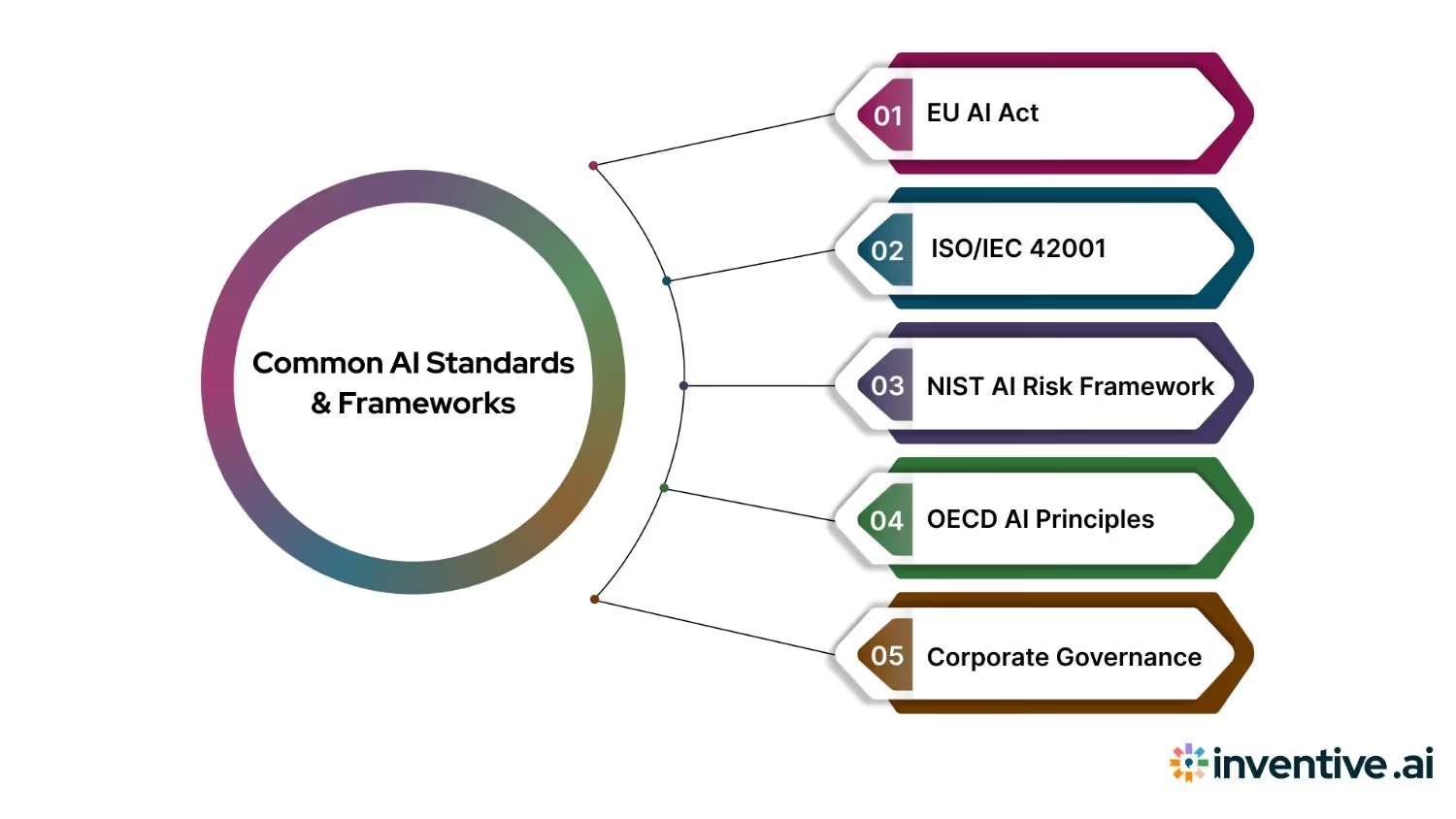

Common AI-Specific Standards or Frameworks

AI compliance draws on a growing set of global frameworks that define 'responsible AI' as systems that are ethical, transparent, fair, and accountable across industries and jurisdictions. These standards serve as the foundation for AI compliance questionnaires, influencing both the questions asked and the depth of supporting evidence required.

Here are some of the most widely recognized frameworks shaping AI governance today:

1. EU AI Act

The EU Artificial Intelligence Act classifies AI systems into four risk categories: unacceptable, high, limited, and minimal. It assigns specific obligations to each tier. High-risk systems must undergo conformity assessments, maintain detailed technical documentation, and support ongoing monitoring after deployment. This regulation forms the backbone of AI compliance for organizations operating in or serving the EU market.

2. ISO/IEC 42001

This international standard specifies requirements for establishing, implementing, maintaining, and continually improving an AI Management System (AIMS). It supports responsible AI development by embedding accountability, transparency, and lifecycle governance into enterprise operations. ISO/IEC 42001 is especially relevant for organizations seeking certification or alignment with global best practices.

3. NIST AI Risk Management Framework (AI RMF)

Developed by the U.S. National Institute of Standards and Technology, the AI RMF provides a structured approach for managing risks across the AI lifecycle. It emphasizes trustworthiness, including considerations such as privacy, safety, resilience, and explainability. Organizations use it to evaluate and reduce systemic risks in AI design, deployment, and use.

4. OECD AI Principles

These high-level, internationally agreed-upon principles promote the responsible stewardship of trustworthy AI. They focus on values such as fairness, transparency, robustness, and accountability. Although voluntary, the OECD principles significantly influence national policies and are increasingly cited in cross-border compliance discussions.

5. Company-Specific Governance Models

Many organizations supplement external standards with internal governance frameworks tailored to their risk profile, sector, and ethical commitments. These models may include custom assessment templates, escalation workflows, and review boards to evaluate AI use cases. They often set stricter thresholds than those required by law, particularly in sensitive domains such as finance, healthcare, or defense.

How to Structure the AI Compliance Questionnaire?

An effective AI compliance questionnaire must go beyond surface-level checkboxes. It should dig deep into how AI systems are designed, deployed, governed, and maintained across all functions and vendors. The structure should follow a logical flow that aligns with the AI lifecycle and key compliance pillars such as transparency, accountability, fairness, data privacy, and security.

Here’s a step-by-step breakdown of how to structure the questionnaire:

1. General Information and Context

Start by collecting foundational details that establish context. This helps reviewers quickly understand what the AI system is, who owns it, and how it’s used.

- Project Name & Description: What is the system called? What function does it serve?

- Business Owner: Who in the organization is responsible for this system?

- Deployment Status: Is the system in development, piloting, or live production?

- System Type: Is it rule-based, machine learning, generative AI (including LLMs), or something else?

- Vendors or Third Parties Involved: List any external tools, APIs, or partners integrated.

2. Purpose and Intended Use

Clarify the purpose and scope of the AI system. This supports risk classification and ensures alignment with ethical guidelines.

- Primary Objective: What decision or action is the AI supporting or automating?

- Target Users: Who interacts with or is impacted by the system (e.g., employees, customers, regulators)?

- Geographies of Use: Which countries or regions is the system deployed in? (Important for regional regulations like GDPR or PDPO)

- Human Oversight: Is there a human in the loop? If so, when and how is oversight triggered?

3. Data Governance

Data governance is crucial for AI compliance: improper data handling can lead to privacy violations, biased models, and breaches of regulations such as GDPR and HIPAA, which mandate strict controls on data sourcing, storage, and consent. This section checks that data sourcing, handling, and storage practices meet those standards.

- Data Sources: Where does training or input data come from (internal logs, public datasets, licensed sources)?

- Data Type: Is any of the data sensitive or personal (e.g., health, financial, biometric)?

- Anonymization/Pseudonymization: Are techniques used to mask personal identifiers?

- Data Retention Policy: How long is data stored, and under what conditions is it deleted?

- Consent Mechanisms: How is user consent collected, tracked, and managed?
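The pseudonymization question is easier to answer concretely with an example. The sketch below shows one common technique, keyed hashing with HMAC-SHA256 from Python's standard library: the same identifier always maps to the same token, so records can still be joined, but without the secret key no one can recompute tokens from guessed identifiers. The key and record fields are hypothetical. Note that under the GDPR, pseudonymized data is still personal data, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a deterministic, keyed token (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key for illustration only; in practice, load it from a secrets
# manager and store it separately from the pseudonymized data.
KEY = b"example-key-do-not-use-in-production"

record = {"email": "jane@example.com", "loan_amount": 12000}
# Only the direct identifier is replaced; other fields pass through unchanged.
safe_record = {**record, "email": pseudonymize(record["email"], KEY)}
print(safe_record)
```

Because the token is deterministic, the same person appears under the same token across datasets; rotating or destroying the key breaks that linkage.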

4. Model Development and Testing

This section focuses on how the AI model is built and evaluated to ensure it meets accuracy, fairness, and robustness requirements.

- Model Type: What kind of model is used (e.g., supervised learning, NLP transformer)?

- Training Practices: Who trained the model, and using what data?

- Bias Mitigation: What steps were taken to reduce bias (e.g., balanced datasets, fairness constraints)?

- Testing Methodology: How was the model validated (e.g., cross-validation, A/B testing, or simulation)?

- Performance Metrics: What KPIs are used (accuracy, precision/recall, F1 score), and what are the results?
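For the performance-metrics question, it helps to show how the reported figures are derived. This minimal sketch computes accuracy, precision, recall, and F1 for a binary classifier from labeled predictions; the labels are made-up sample data, and real evaluations would typically use a held-out test set and an established library such as scikit-learn.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    if not pairs:
        raise ValueError("need at least one prediction")
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Reporting precision and recall separately, rather than accuracy alone, shows reviewers whether errors skew toward false approvals or false rejections.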

5. Transparency and Explainability

Most ethical AI frameworks and a growing number of regulations expect AI systems to be explainable.

- Explainability Methods: What tools or techniques, such as SHAP or LIME, are used to explain how the model arrives at specific predictions or decisions?

- End-User Communication: Are explanations provided to users? In what format?

- Documentation: Is model documentation available and updated regularly?

6. Risk Assessment and Impact Analysis

Gauge the potential risks the AI system may introduce to individuals, business operations, or public trust.

- Risk Category: Based on impact, does the system fall into a minimal, limited, high, or unacceptable risk class (as per the EU AI Act, for example)?

- Potential Harms: Could the system cause discrimination, financial harm, or misinformation?

- Impacted Stakeholders: Who might be adversely affected, and how?

- Mitigation Strategies: What controls are in place to reduce these risks?

7. Security and Resilience

Focus on cybersecurity and the system’s ability to handle adversarial threats and failure scenarios.

- Security Protocols: Are there controls for data access, model integrity, and endpoint protection?

- Adversarial Testing: Has the model been tested against manipulation or attacks?

- Incident Response Plan: Is there a protocol in case of model failure or breach?

8. Monitoring and Maintenance

AI compliance doesn’t end at deployment. Ongoing governance is key.

- Monitoring Frequency: How often is the model reviewed or retrained?

- Drift Detection: Are there tools to detect concept or data drift?

- Audit Trails: Are decisions logged and traceable for audit purposes?

- Change Management: What is the process for making updates or retiring models?
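One way to make audit trails tamper-evident, assuming an append-only log of model decisions, is to hash-chain entries so that each entry's hash covers the previous one. The sketch below is illustrative only: the event fields (`model`, `version`, `decision`, `request_id`) are hypothetical, and chaining alone does not detect deletion of the most recent entries unless the latest hash is also stored somewhere independent.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(log: list, event: dict) -> dict:
    """Append an event whose hash also covers the previous entry's hash."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": event,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash:
            return False
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"model": "credit-scorer", "version": "1.4",
                         "decision": "deny", "request_id": "r-001"})
append_entry(audit_log, {"model": "credit-scorer", "version": "1.4",
                         "decision": "approve", "request_id": "r-002"})
print(verify_chain(audit_log))  # True
audit_log[0]["event"]["decision"] = "approve"  # simulate tampering
print(verify_chain(audit_log))  # False
```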

9. Legal and Regulatory Alignment

Ensure the AI system adheres to relevant laws and industry-specific requirements.

- Applicable Regulations: Does the system fall under GDPR, HIPAA, the EU AI Act, etc.?

- Cross-Border Data Transfers: If data moves between regions, how is compliance ensured?

- Third-Party Compliance: Are vendors contractually obligated to meet your compliance standards?

10. Approval and Sign-Off

The final section should route the questionnaire to key stakeholders for review and approval.

- Compliance Officer Review

- Data Protection Officer Sign-Off

- Business Leader or Product Owner Approval

- Legal Review (if applicable)

Include timestamps, version control, and documentation links for traceability.

Manually answering security questionnaires wastes time and invites inconsistency. Inventive AI uses generative AI and past responses to auto-fill up to 90% of security questionnaires. Its machine learning engine understands context and selects the most accurate responses from your centralized knowledge base, drastically reducing time spent and eliminating repetitive work.

Core Questionnaire Sections and Sample Questions

Each section of the AI compliance questionnaire aligns with a critical area of risk or governance. The goal is to assess not only the technical robustness of the system but also the accountability, transparency, and regulatory alignment across the organization. These questions should be tailored to the AI system’s purpose, risk tier, and deployment scale.

1. System Overview

This section establishes a foundational understanding of the system’s scope, ownership, and role within the organization.

- What function does this AI system perform?

Clarify the primary use case (e.g., fraud detection, personalization, predictive maintenance). Include system boundaries and intended outputs.

- Who owns deployment responsibility across departments?

Identify accountable roles, such as product owners, ML leads, or department heads. Detail cross-functional responsibilities between tech, compliance, and operations.

- What is the intended user base (internal, external, or both)?

Specify whether the system serves employees, customers, vendors, or regulators, and outline relevant access constraints.

2. Data Governance

Focuses on the lineage, quality, legality, and ethical sourcing of training and operational data.

- What datasets train the model?

List all sources, including proprietary datasets, public datasets, and third-party licensed data. Include dataset sizes, formats, and update cycles.

- Have you conducted a bias audit on training data?

Indicate whether demographic parity, disparate impact, or fairness-through-awareness audits have been performed. Include audit results if available.

- Is user consent captured for personal data ingestion?

Explain consent capture mechanisms, such as cookie banners, opt-in forms, or data processing agreements. Note whether consent is explicit or implied.

- Is the data anonymized or pseudonymized before use?

Detail the methods applied (e.g., k-anonymity, tokenization) and the associated risk thresholds.
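To illustrate the bias-audit question, the sketch below computes per-group selection rates, the demographic parity difference, and the disparate impact ratio from a list of outcomes. The groups and outcomes are invented, and the 0.8 cutoff reflects the "four-fifths rule" of thumb from U.S. employment guidance; it is a screening heuristic, not a legal determination.

```python
from collections import defaultdict


def selection_rates(groups, outcomes):
    """Share of positive outcomes (e.g., approvals) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += int(outcome)
    return {g: positives[g] / totals[g] for g in totals}


def fairness_summary(groups, outcomes):
    rates = selection_rates(groups, outcomes)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between the highest and lowest rates.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: lowest rate divided by highest rate.
        "impact_ratio": lowest / highest if highest else 1.0,
    }


groups = ["A"] * 10 + ["B"] * 10
outcomes = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7  # 60% vs. 30% approvals
summary = fairness_summary(groups, outcomes)
print(summary)
if summary["impact_ratio"] < 0.8:
    print("Below the four-fifths rule of thumb; flag for review.")
```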

3. Model Design and Risk Controls

This section assesses how risk is categorized and mitigated during model development.

- What risk tier does the system fall under?

Reference your organization’s AI risk classification framework, based on impact on rights, safety, financial exposure, or critical infrastructure.

- Has the model undergone adversarial testing?

Adversarial testing simulates attacks such as input perturbations, model inversion, or data poisoning to evaluate how well the model resists manipulation. Detail any simulated attacks used and their results.

- Are fallback procedures in place for failure modes?

Describe any circuit breakers, default rules, or manual overrides. Include scenarios where these would activate.

- Does the model include differential privacy or other protective design choices?

Outline privacy-preserving mechanisms embedded in the model architecture or training process.
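Differential privacy can sound abstract in a questionnaire answer. The sketch below shows the classic Laplace mechanism applied to a counting query, assuming each person contributes at most one record (so the query's sensitivity is 1). It is a teaching example only: production systems should rely on a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses and Python's `random` module is not cryptographically secure.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) by inverse transform sampling."""
    u = rng.random() - 0.5  # uniform on [-0.5, 0.5)
    while u == -0.5:        # avoid log(0) at the boundary
        u = rng.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1 - 2 * abs(u))


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)
ages = [34, 51, 29, 62, 45, 38, 70, 23]
noisy = private_count(ages, lambda age: age >= 50, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller `epsilon` means more noise and stronger privacy; the answer to the questionnaire should state which value was chosen and why.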

4. Explainability and Transparency

Evaluates the model’s interpretability and how results are communicated to various stakeholders.

- Can outputs be explained to non-technical stakeholders?

Describe documentation, visualizations, or tooling (such as dashboards) used for end-user transparency.

- Are explainability tools or frameworks used (e.g., SHAP, LIME)?

Specify the tools used and the level of granularity, such as local versus global explanations or feature importance scoring.

- Is there a user-facing explanation for automated decisions?

Mention if explanations are integrated into UI/UX (e.g., "why this loan was denied" or "why this product was recommended").
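SHAP is built on Shapley values from cooperative game theory. For a model with only a few features, exact Shapley values can be computed by brute force, which makes the idea of "feature contribution" concrete. The sketch below is not the SHAP library: it uses a hypothetical linear credit-scoring model and a single baseline applicant to stand in for "feature absent", whereas SHAP implementations compute or approximate these values efficiently, typically against a background dataset.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average each feature's marginal contribution over
    all feature orderings. Features not yet 'added' keep their baseline values.
    Cost grows factorially, so this only suits a handful of features."""
    features = list(instance)
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)
        prev_output = predict(current)
        for f in order:
            current[f] = instance[f]  # switch this feature to the real value
            output = predict(current)
            totals[f] += output - prev_output
            prev_output = output
    return {f: total / len(orderings) for f, total in totals.items()}


def credit_score(x):
    # Hypothetical linear scoring model used only for illustration.
    return 0.5 * x["income"] - 0.8 * x["debt_ratio"] + 0.3 * x["years_employed"]


applicant = {"income": 4.0, "debt_ratio": 2.5, "years_employed": 1.0}
average_applicant = {"income": 3.0, "debt_ratio": 1.0, "years_employed": 2.0}

phi = shapley_values(credit_score, applicant, average_applicant)
print(phi)
```

The contributions sum to the gap between this applicant's score and the baseline score, which is what makes a statement like "debt ratio lowered this score the most" traceable for a user-facing explanation.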

5. Human Oversight

Assess where and how human judgment interacts with automated decisions.

- Is human-in-the-loop oversight active?

Specify checkpoints in the pipeline where humans can validate, override, or supplement the system's outputs.

- At what thresholds is human intervention triggered?

Define metrics or business rules that prompt manual review, such as confidence scores, anomaly scores, or risk flags.

- Are reviewers trained to interpret model outputs responsibly?

Detail the training or guidelines provided to human reviewers to reduce cognitive bias or overreliance on AI.
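Intervention thresholds are easiest to audit when they are written down as explicit rules. The sketch below routes each automated decision either to automatic processing or to human review; the `Decision` fields, the 0.85 confidence floor, and the rule that every denial gets reviewed are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical policy values; each organization sets its own.
CONFIDENCE_FLOOR = 0.85
ALWAYS_REVIEW_LABELS = {"deny"}


@dataclass
class Decision:
    request_id: str
    label: str
    confidence: float
    risk_flags: tuple[str, ...] = ()


def route(decision: Decision) -> tuple[str, str]:
    """Return ('auto' or 'human_review', reason) so every routing is explainable."""
    if decision.risk_flags:
        return "human_review", "risk flag: " + ", ".join(decision.risk_flags)
    if decision.confidence < CONFIDENCE_FLOOR:
        return "human_review", f"confidence {decision.confidence:.2f} below {CONFIDENCE_FLOOR}"
    if decision.label in ALWAYS_REVIEW_LABELS:
        return "human_review", f"label '{decision.label}' always requires review"
    return "auto", "within automated thresholds"


for d in [
    Decision("r-1", "approve", 0.97),
    Decision("r-2", "approve", 0.62),
    Decision("r-3", "deny", 0.99),
    Decision("r-4", "approve", 0.95, ("income_mismatch",)),
]:
    print(d.request_id, *route(d))
```

Returning the reason alongside the route gives auditors a record of why each case did or did not reach a human.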

6. Security and Access Controls

Covers the protection of AI assets against unauthorized access and manipulation.

- What measures protect model access and API endpoints against unauthorized use?

Include encryption protocols, rate limiting, authentication standards (such as OAuth2), and endpoint obfuscation.

- Are logs retained for inference requests?

Explain log retention policies: what is logged (e.g., inputs and outputs, user ID), how long it is stored, and who has access.

- Are model versioning and change control documented?

Ensure each model update is tracked, with logs indicating the reason for the change and any associated risk assessment.
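Rate limiting, listed above as an endpoint control, is often implemented with a token bucket. This sketch assumes one bucket per API client; the capacity and refill rate are hypothetical, and an injectable clock is used so the behavior can be demonstrated deterministically.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens/second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class FakeClock:
    """Manually advanced clock for the demonstration below."""

    def __init__(self):
        self.t = 0.0

    def __call__(self):
        return self.t


clock = FakeClock()
bucket = TokenBucket(capacity=3, rate=1.0, clock=clock)
print([bucket.allow() for _ in range(5)])  # [True, True, True, False, False]
clock.t += 2.0
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

In a real service the buckets would typically live in a dictionary keyed by API key, or in a shared store when several servers handle the same clients.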

7. Post-Deployment Monitoring

Assess how the model is governed after it goes live, focusing on performance degradation or unexpected behavior.

- What metrics track model drift?

Include measures such as data distribution shifts, concept drift, or variance in performance KPIs like precision and recall.

- How often does the model undergo performance reviews?

State the review frequency (e.g., monthly or quarterly) and whether evaluations are manual, automated, or hybrid.

- Are user feedback loops in place to detect edge cases or harm?

Detail how feedback is captured (e.g., flagging functionality, surveys) and used to update or retrain the model.
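A common way to quantify data distribution shift is the Population Stability Index (PSI), which compares the share of values that fall into each bin in a baseline sample against a recent sample. The sketch below assumes a single numeric feature and hand-picked bin cut points; the sample values are invented. A widely used rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as significant drift, but teams should set thresholds for their own data.

```python
import bisect
import math


def psi(baseline, recent, cuts, eps=1e-6):
    """Population Stability Index: sum over bins of (A - E) * ln(A / E)."""

    def shares(values):
        # bisect_right maps each value to one of len(cuts) + 1 bins.
        counts = [0] * (len(cuts) + 1)
        for v in values:
            counts[bisect.bisect_right(cuts, v)] += 1
        # Floor empty bins at eps to avoid division by zero and log(0).
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical applicant ages at training time vs. the last month.
baseline = [22, 25, 31, 35, 38, 41, 44, 48, 52, 57, 61, 66]
recent = [41, 45, 48, 52, 55, 58, 61, 63, 66, 70, 72, 75]
cuts = [30, 40, 50, 60]

score = psi(baseline, recent, cuts)
print(f"PSI = {score:.2f}")
if score > 0.25:
    print("Significant drift: schedule a model review.")
```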

8. Legal and Ethical Review

Ensures the AI system aligns with applicable laws and internal values.

- Does the system align with internal AI ethics guidelines?

Map system behavior to organizational principles, such as fairness, accountability, and human agency.

- Have legal teams reviewed system documentation?

Include specific regulatory checklists (such as GDPR, CCPA, or the EU AI Act), contract clauses, and records of legal sign-off.

- Have DPIAs or algorithmic impact assessments been completed?

Detail the outcomes of any formal assessments, particularly in regulated domains such as healthcare, finance, or government.

Best Practices for Using the AI Compliance Questionnaire

The following best practices offer a blueprint for maximizing the impact of your questionnaire. You can use these whether you’re assessing internal teams, third-party vendors, or AI-integrated business units.

1. Define the Scope Clearly Before Distribution: Before rolling out the questionnaire, map out where AI is being used across your organization or vendor ecosystem. This includes predictive analytics, automated decision systems, natural language tools like chatbots, and machine learning models.

Tip: When deploying diagnostic AI, a healthcare organization should create distinct sections for internal clinical tools, patient-facing apps, and vendor-provided platforms. Each category may involve different compliance frameworks, such as HIPAA or GDPR, so a one-size-fits-all questionnaire won't capture the nuance.

2. Customize Questions Based on Risk Profiles: Not every AI system carries the same level of risk. Tailor the depth and complexity of your questions based on how the AI system impacts decisions, users, or sensitive data.

Tip: An AI model used for internal document sorting may only need basic questions on functionality and oversight. In contrast, an AI used to approve or reject loan applications should trigger a more comprehensive questionnaire that covers algorithmic bias, data lineage, explainability, and human review mechanisms.

3. Align Questions with Applicable Regulations: Ensure that your questionnaire reflects the legal and regulatory obligations relevant to your operating markets and industry. Different jurisdictions have different requirements around consent, transparency, risk classification, and oversight.

Tip: Under the EU AI Act, high-risk systems must document risk mitigation, provide for human oversight, and support logging. Your questionnaire should ask vendors operating in the EU whether these requirements are met and request supporting documentation.

4. Involve Cross-Functional Stakeholders in Development: Developing the questionnaire in isolation leads to blind spots. Collaboration ensures the questionnaire covers legal, technical, operational, and ethical aspects.

Tip: Legal teams can ensure data privacy questions align with regional laws. IT or AI specialists can verify that terminology around model training or system architecture is accurate. Procurement can ensure the language is vendor-friendly without diluting compliance rigor.

5. Use Clear, Non-Technical Language Where Appropriate: Complex questions reduce response quality, especially when the questionnaire is sent to business users or vendor account managers. Keep language simple and provide definitions where needed.

Tip: Instead of asking, “Does your system utilize unsupervised neural architectures?”, you could ask, “Does your system learn patterns from data without predefined labels? (e.g., clustering or anomaly detection)”, and include a tooltip explaining the relevance.

6. Automate Routing, Tracking, and Reminders: Use automation tools to route the questionnaire to the right contacts, track progress, and follow up with non-responders. This ensures speed, consistency, and accountability.

Tip: A SaaS company might integrate the questionnaire into its procurement platform so that it is triggered automatically during vendor onboarding. Automated workflows can then escalate pending responses to the compliance team after a set period.
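The escalation workflow in this tip comes down to comparing each open request's age against reminder and escalation windows. The sketch below assumes a hypothetical `Request` record and 7- and 14-day windows; a procurement platform would run similar logic on a schedule and send the notifications.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Request:
    vendor: str
    sent_on: date
    completed: bool = False


def triage(requests, today, remind_after=7, escalate_after=14):
    """Split open questionnaire requests into reminder and escalation lists."""
    reminders, escalations = [], []
    for r in requests:
        if r.completed:
            continue
        age_days = (today - r.sent_on).days
        if age_days >= escalate_after:
            escalations.append(r.vendor)
        elif age_days >= remind_after:
            reminders.append(r.vendor)
    return reminders, escalations


today = date(2025, 3, 31)
requests = [
    Request("Vendor A", today - timedelta(days=3)),
    Request("Vendor B", today - timedelta(days=9)),
    Request("Vendor C", today - timedelta(days=20)),
    Request("Vendor D", today - timedelta(days=30), completed=True),
]
reminders, escalations = triage(requests, today)
print("Send reminder:", reminders)              # ['Vendor B']
print("Escalate to compliance:", escalations)   # ['Vendor C']
```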

7. Maintain Version-Controlled Records of Responses: Compliance requires that responses be auditable. Store every completed questionnaire with version history, timestamps, and responder details. This allows you to track changes over time and spot emerging risk trends.

Tip: A company using a vendor’s AI chatbot may request updated questionnaires after each major product release. Comparing versioned responses helps identify whether the vendor has improved transparency, added human oversight, or addressed flagged biases.
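Comparing versioned responses is straightforward if each version is stored as a mapping from question ID to answer. The question IDs and answers below are hypothetical, and a real record would also carry the timestamp, responder, and version number described above.

```python
def diff_versions(old: dict, new: dict) -> dict:
    """Compare two questionnaire versions keyed by question ID."""
    changed = {q: (old[q], new[q]) for q in sorted(old.keys() & new.keys())
               if old[q] != new[q]}
    added = {q: new[q] for q in sorted(new.keys() - old.keys())}
    removed = {q: old[q] for q in sorted(old.keys() - new.keys())}
    return {"changed": changed, "added": added, "removed": removed}


v1 = {
    "HO-1": "No human review",
    "EX-2": "No user-facing explanations",
    "BI-1": "Bias audit planned",
}
v2 = {
    "HO-1": "Human review for low-confidence replies",
    "EX-2": "No user-facing explanations",
    "BI-1": "Bias audit completed",
    "SEC-4": "Prompt-injection testing in place",
}
for kind, items in diff_versions(v1, v2).items():
    print(kind, items)
```

Unchanged answers (here `EX-2`) drop out of the diff, which keeps reviewers focused on what the vendor actually changed.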

8. Integrate Questionnaire Results Into Risk Scoring Frameworks: Treat questionnaire responses as structured data that feed into broader risk metrics. Assign weights to certain answers and generate risk scores that can guide mitigation strategies.

Tip: If a vendor reports using sensitive personal data without encryption or retention policies, their score could exceed your acceptable threshold. This could trigger a remediation plan or escalation to legal for further review.
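The scoring approach in this tip can be sketched as a weighted sum of risk indicators compared with a threshold. The indicator names, weights, and threshold below are hypothetical and would need calibrating to your own risk appetite. One design choice worth noting: unanswered questions are scored as gaps rather than silently treated as low risk.

```python
# Hypothetical weights and threshold; calibrate to your own risk appetite.
RISK_WEIGHTS = {
    "uses_sensitive_personal_data": 3,
    "no_encryption_at_rest": 4,
    "no_retention_policy": 2,
    "no_human_oversight": 3,
    "no_bias_testing": 2,
}
ESCALATION_THRESHOLD = 7


def risk_score(answers: dict) -> tuple[int, list[str]]:
    """Sum the weights of triggered risk indicators.

    A missing answer defaults to True, so unanswered questions count as gaps.
    """
    triggered = [k for k in RISK_WEIGHTS if answers.get(k, True)]
    return sum(RISK_WEIGHTS[k] for k in triggered), triggered


vendor_answers = {
    "uses_sensitive_personal_data": True,
    "no_encryption_at_rest": True,
    "no_retention_policy": True,
    "no_human_oversight": False,
    "no_bias_testing": False,
}
score, reasons = risk_score(vendor_answers)
if score > ESCALATION_THRESHOLD:
    print(f"Score {score} exceeds {ESCALATION_THRESHOLD}; escalate to legal: {', '.join(reasons)}")
```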

9. Follow Up With Deeper Assessments When Needed: The questionnaire serves as a screening tool, not the final step in the process. Use red flags or gaps in answers to trigger deeper reviews, audits, or interviews.

Tip: If a vendor claims their AI does not require fairness testing because it doesn’t use personal data but fails to define what qualifies as “personal,” you may need a legal and technical review to validate this claim and request a data protection impact assessment (DPIA).

10. Review and Update the Questionnaire Regularly: As AI systems evolve and regulations change, your questionnaire must stay current. Schedule regular reviews to keep the content aligned with both internal changes and external developments.

Tip: When NIST releases new AI risk management guidance or the EU AI Act is updated, revise your questionnaire to reflect emerging concepts such as systemic risk, robustness, or continuous monitoring.

Inventive AI: Simplifying Questionnaire Response with Automation

Today, responding to questionnaires isn't just a matter of ticking boxes; it's about managing the process efficiently while ensuring accuracy. Traditional methods can be slow and prone to errors, wasting valuable time and resources. Inventive AI transforms this by automating the creation of accurate, context-driven responses, making it easier for teams to handle these demands with less effort and more precision.

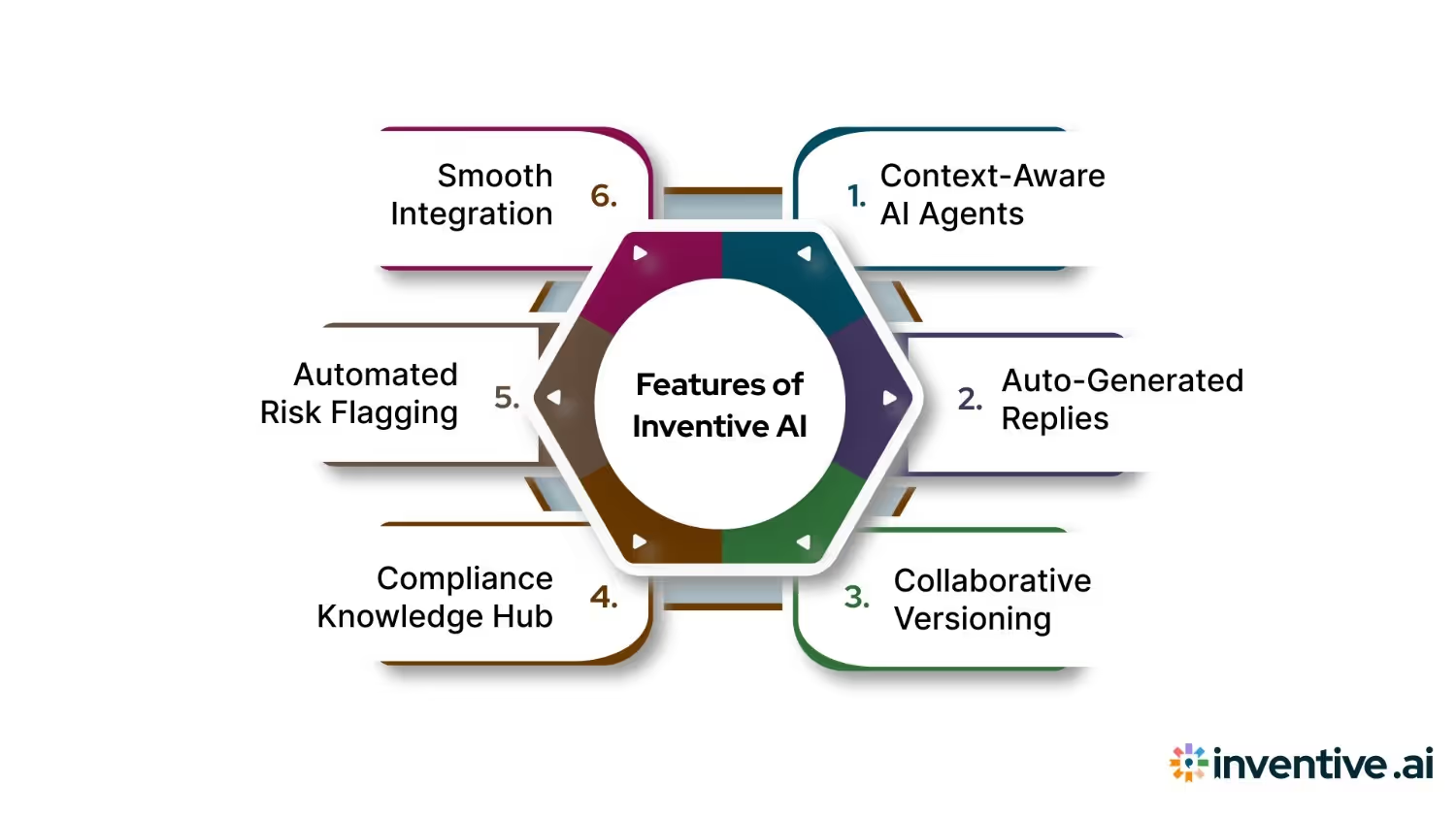

Key Features of Inventive AI:

- AI-Powered Draft Responses: Inventive AI streamlines the process by automatically generating initial responses using your existing data and approved content. This speeds up response times by up to 10x, allowing teams to focus on more strategic tasks while ensuring accuracy in the answers they provide. For decision-makers, this means faster turnaround times and improved efficiency.

- Centralized Knowledge Hub: All necessary documents, including past questionnaire responses, policies, and certifications, are stored in one easy-to-access hub. This eliminates the need to search across multiple platforms, ensuring that the most up-to-date and relevant information is always within reach. For proposal managers and the sales team, it results in less time spent searching for documents and more streamlined workflows.

- Content Management: Inventive AI actively monitors the content used in responses, flagging outdated or conflicting information before it's submitted. This ensures that your responses are always based on the most accurate and current data available. For decision-makers, it means reduced risk of errors and fewer last-minute revisions, saving both time and effort.

- Collaborative Workflow: Inventive AI supports team collaboration by allowing multiple people to work together on a single response. It tracks progress, assigns tasks, and manages revisions in one platform, reducing bottlenecks and ensuring deadlines are met. For managers, this boosts efficiency, fosters better communication, and ensures that no details are overlooked.

- Transparent Source Tracking: Full visibility into where each response comes from provides an easy-to-follow audit trail. This ensures transparency and accountability, making it simpler for decision-makers to manage responses across teams. It also helps organizations stay prepared for audits or inquiries, giving executives peace of mind knowing that their documentation is organized and accurate.

Inventive AI applies the same approach to AI compliance questionnaires, streamlining completion, reducing risk, and improving the quality of the data captured. It’s not just about faster answers; it’s about smarter ones.

By automating critical steps in the security questionnaire process, Inventive AI’s AI-Powered Security Questionnaire Software helps your team reclaim hours of manual effort, minimize human error, and produce audit-ready responses with greater speed. Acting as your AI assistant, it delivers up to 10x faster completion times and enhanced accuracy in security assessments, revolutionizing how enterprises handle vendor risk and compliance at scale.

FAQs

1. What is an AI Compliance Questionnaire?

Ans. An AI Compliance Questionnaire is a structured tool that helps organizations assess whether their AI systems align with legal, ethical, and operational standards. It typically covers topics like data governance, algorithmic transparency, bias mitigation, and regulatory readiness.

2. Who should fill out the AI Compliance Questionnaire?

Ans. Stakeholders involved in AI development, deployment, or oversight, such as data scientists, compliance officers, legal teams, and product managers, should collaborate to complete the questionnaire accurately.

3. Why is completing the questionnaire important before deploying an AI system?

Ans. It helps identify compliance gaps early in the lifecycle, reducing legal and reputational risk. It also ensures that the AI solution adheres to internal policies and evolving global regulations, such as the EU AI Act or U.S. state-level privacy laws.

4. What areas does the questionnaire typically cover?

Ans. Most questionnaires assess key domains, including data sourcing and quality, model explainability, user consent, risk assessments, bias detection, auditability, and incident response protocols.

5. How often should the AI Compliance Questionnaire be updated?

Ans. Review and update the questionnaire at key checkpoints such as before launch, after major updates, and at regular intervals (for example, annually). Frequent reviews ensure continued alignment with regulatory changes and internal risk frameworks.

Understanding that sales leaders struggle to cut through the hype of generic AI, Mukund focuses on connecting enterprises with the specialized RFP automation they actually need at Inventive AI. An IIT Jodhpur graduate with 3+ years in growth marketing, he uses data-driven strategies to help teams discover the solution to their proposal headaches and scale their revenue operations.

Recognizing that complex RFPs demand deep technical context rather than just simple keyword matching, Vishakh co-founded Inventive AI to build a smarter, safer "RFP brain." A published author and researcher in deep learning from Stanford, he applies rigorous engineering standards to ensure that every automated response is not only instant but factually accurate and secure.
